Product planning for machine learning

What you should know in order to plan machine-learning-based solutions.

By Juan Hernandez and Arthur Xu

When it comes to applied machine learning, the algorithm is often what captures the popular imagination. Most machine learning articles highlight the method used to derive the model rather than the operating conditions needed to use the model successfully. However, the model is just one piece of a larger system. In a company context, an algorithm’s leverage comes from being part of large-scale, continuously running processes that ultimately serve a customer need. Moving an ML-based solution from a personal development environment into a production system introduces additional factors, ones that organizations often overlook in the rush to capitalize on ML.

We recognize that ML is a versatile tool. But, like any tool, its effectiveness is tied to the operational environment and the problem context. This blog post covers the factors that product and process managers should know in order to plan well for ML-based solutions.

How ML fits into product strategy and automation

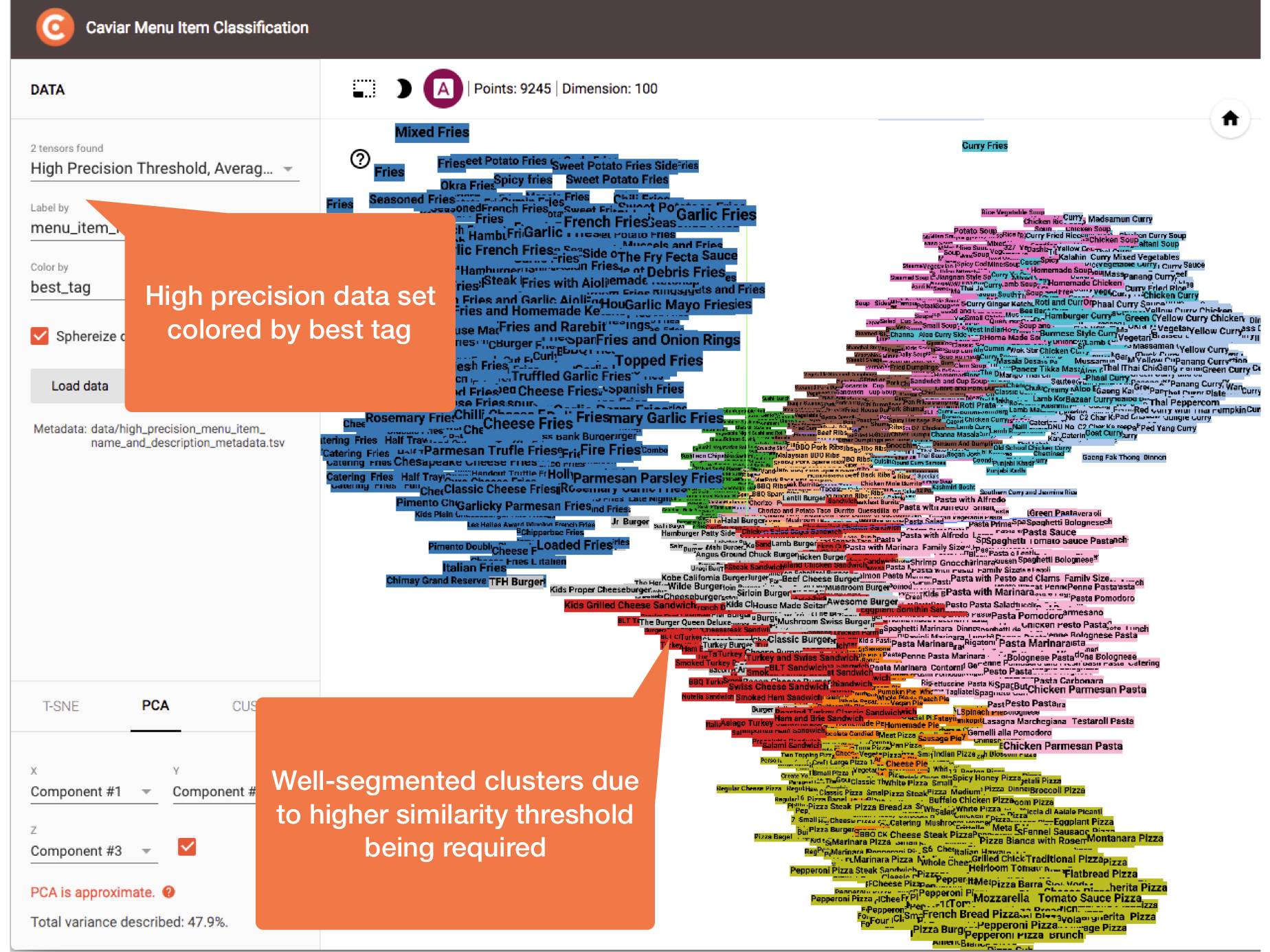

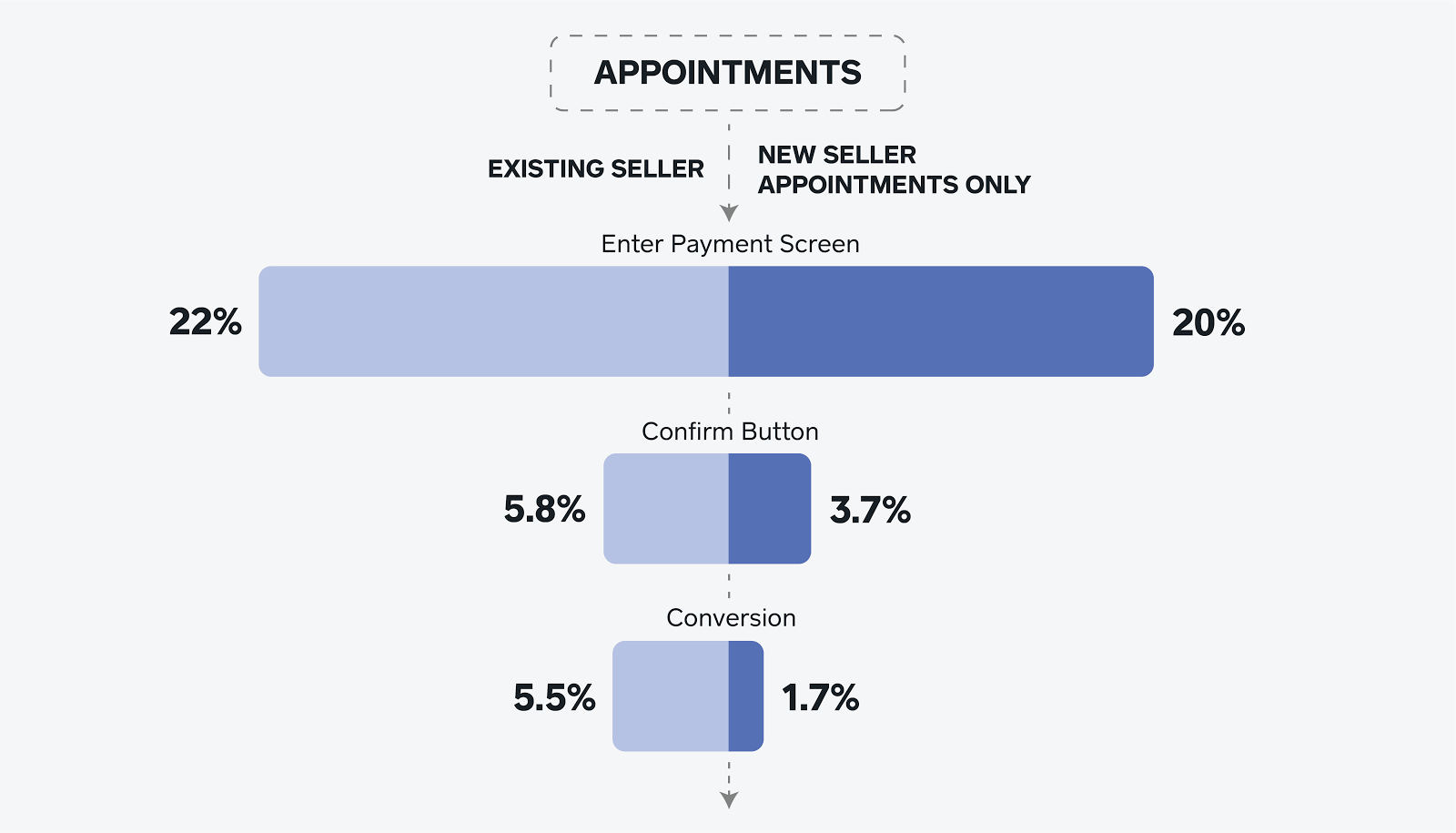

An organization can use ML in different parts of its product strategy. Some applications of ML are more strategic and involve applying statistical methods to uncover insights and make product- and corporate-level business recommendations. Examples include identifying bottlenecks in the customer acquisition funnel, or finding statistical clusters that may lead to better segmentation and targeting.

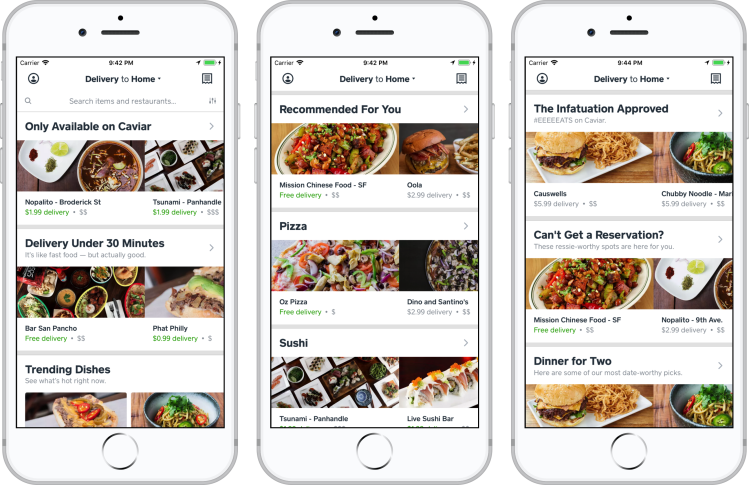

Other applications of ML are tactical in nature and involve using ML to automate or optimize some process. A few examples of this second category are fraud detection, marketing campaign optimization, product recommendation, document translation, driving a car, and many others.

What these examples have in common is that they tend to involve automated decision-making. Automation allows you to do the same amount of work with fewer people, or to free up people and other expensive assets for more valuable projects. A support agent can answer the same question again and again, but it’s even better if their attention is freed up so they have the capacity to notice emerging inquiry patterns and share those customer insights with product managers to guide innovation and improve the customer experience.

The remainder of this blog post focuses on the applications of ML within product strategy that correspond to optimization and automation.

Traditional automation and ML-based approach

It’s important to distinguish between automation and ML. The two are not synonymous, though ML supports automation. Automation can be achieved by identifying optimal business rules, but nowadays ML is often a more accurate and more efficient way of doing intelligent automation. ML allows an organization to make predictions directly from data and to use those predictions to automate some behavior.

How is an ML-based approach different from traditional automation?

Traditional automation: Business process management and rule-based systems. Rules are determined by business experts and analysts. Rules are static but can be revised.

Automation with machine learning: ‘Rules’ use optimized functions learned from data. ‘Rules’ are flexible and can adapt to new behavioral patterns.

Automation with ML still requires embedding the ML engine in some way into the decision engine. This can be thought of in two ways: ML acting within the rules engine, or ML as an input to the rules engine. Either way, building a sophisticated rules engine that uses ML to route decisions requires coordination among data scientists and engineers.
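To make the two options concrete, here is a minimal Python sketch of a first-match rules engine. Everything in it is hypothetical: `run_rules`, `toy_risk_model` (a stand-in for a trained model), and the thresholds are illustrative assumptions, not a description of any production system.

```python
from typing import Callable, Dict, List, Tuple

Facts = Dict[str, float]
Rule = Tuple[Callable[[Facts], bool], str]  # (condition, decision)


def run_rules(facts: Facts, rules: List[Rule], default: str = "approve") -> str:
    """Minimal rules engine: the first rule whose condition holds decides."""
    for condition, decision in rules:
        if condition(facts):
            return decision
    return default


def toy_risk_model(facts: Facts) -> float:
    """Hypothetical stand-in for a trained model: returns a risk score in [0, 1]."""
    return min(1.0, facts["amount"] / 1000.0)


def decide_ml_as_input(facts: Facts) -> str:
    # ML as an input: score once, then expose the score to the rules as one more fact.
    enriched = {**facts, "risk_score": toy_risk_model(facts)}
    rules: List[Rule] = [
        (lambda f: f["amount"] > 5000, "manual_review"),  # expert-written rule
        (lambda f: f["risk_score"] >= 0.9, "decline"),    # rule that reads the score
    ]
    return run_rules(enriched, rules)


def decide_ml_within(facts: Facts) -> str:
    # ML within the engine: a rule's condition calls the model directly.
    rules: List[Rule] = [
        (lambda f: f["amount"] > 5000, "manual_review"),
        (lambda f: toy_risk_model(f) >= 0.9, "decline"),
    ]
    return run_rules(facts, rules)
```

In this toy both functions return the same decisions; the difference is operational. With ML as an input, the model is called for every observation and its score can be logged next to the decision. With ML inside the engine, the model is called only when no earlier rule has already fired.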

Model predictions: How to use the output?

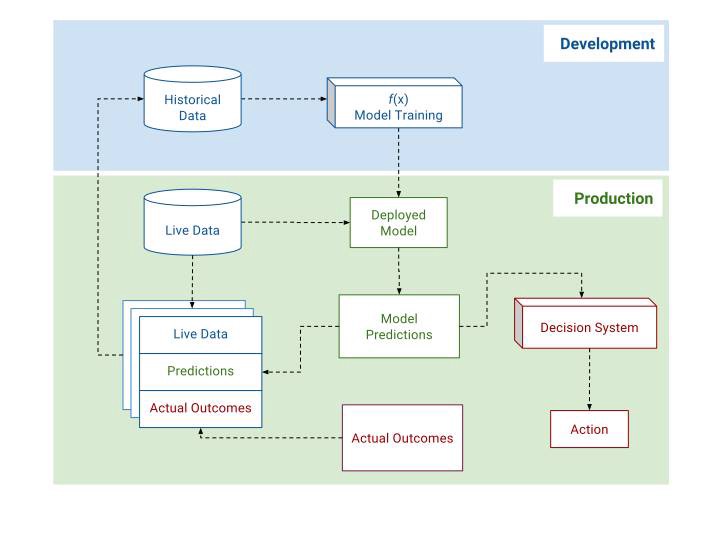

What needs to be built for production: in its mature state, this scoring/decision system will be able to make a decision automatically for a new observation by (1) taking in that observation’s metadata, (2) identifying the appropriate model and the attributes of that observation to evaluate, and (3) applying decision thresholds against predefined evaluation criteria.
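The three steps can be sketched as a small Python function. The model registry, the `payment` model, its features, and its thresholds are all hypothetical placeholders; in practice step (2) would usually involve a call to a separate scoring service rather than an in-process lambda.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class ModelSpec:
    features: List[str]                         # attributes this model evaluates
    score: Callable[[Dict[str, float]], float]  # hypothetical scoring function
    thresholds: List[Tuple[float, str]]         # (minimum score, decision), strictest first


# Hypothetical registry: which model handles which kind of observation.
REGISTRY: Dict[str, ModelSpec] = {
    "payment": ModelSpec(
        features=["amount", "country_mismatch"],
        score=lambda x: 0.5 * min(x["amount"] / 1000.0, 1.0) + 0.5 * x["country_mismatch"],
        thresholds=[(0.8, "block"), (0.5, "review")],
    ),
}


def decide(observation: dict, default: str = "allow") -> Tuple[float, str]:
    # (1) Take in the observation's metadata.
    kind = observation["metadata"]["type"]
    # (2) Identify the appropriate model and the attributes it should evaluate.
    spec = REGISTRY[kind]
    features = {name: observation["attributes"][name] for name in spec.features}
    score = spec.score(features)
    # (3) Apply decision thresholds, checking the strictest first.
    for minimum, decision in spec.thresholds:
        if score >= minimum:
            return score, decision
    return score, default
```

Keeping thresholds in the registry next to each model, rather than hard-coding them in the decision engine, is one way to let a model be recalibrated without redeploying the engine.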

This usually takes the form of a call from the decision engine in production to a scoring API. Of course, this assumes that several working systems exist and can communicate with one another, such as the decision engine, the model scoring application, and a live data feed or queryable data store.

Sometimes, when managers talk about planning for ML, they focus on the steady state: what the ML environment will look like in production. Because the assumption that the data needed to train models exists is not always satisfied, discussion of the steady state often comes prematurely in product planning.

This isn’t to say that we shouldn’t think about these things, but laying out the roadmap to getting there is crucial. The first stage of planning for an ML-based solution is to ensure that the model development process is feasible. Here are some distinctions in the kind of language and topics related to production- vs. development-specific planning.

These distinctions between the way we tend to talk about production and development provide a segue into data acquisition and feedback loops. Most PMs already know that ML depends on the availability of data, but it’s also important to understand that how ML models are trained influences how we plan for future-state solutions. To train a model, you not only need the ability to ingest data live, but also a large set of observations called training data. This data is used to calibrate and optimize the model to perform its intended function.

ML model life cycle

Model training: How does ML work?

ML is a way to generate predictions by using data (also called observations or examples) to learn the optimal function that relates inputs to outputs. In operational applications, the learned function is used to produce a score that can then be used to automate decisions and/or processes. Here are some examples related to things we work on at Square.

Task: You want to automate a process by creating a function that yields some output for new input data.

- Example: A function that calculates a risk score for a seller, customer, etc.
- Example: A function that predicts whether a seller will accept a marketing offer.
- Example: A function that predicts whether a seller will process a fraudulent chargeback.
- Example: A function that predicts whether a seller is a high installment-payment risk.

Solution: Use machine learning to generate that function!
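As a minimal illustration of learning a function from data, the Python sketch below fits a one-feature logistic regression by gradient descent and returns a score between 0 and 1. The toy data (an input `x` and a binary outcome `y`) is invented for illustration; real models use many features and usually a library implementation such as scikit-learn's `LogisticRegression`.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs: List[float], ys: List[int],
                   lr: float = 0.5, epochs: int = 2000) -> Tuple[float, float]:
    """Learn w, b so that sigmoid(w * x + b) approximates P(y = 1 | x).

    Plain batch gradient descent on the average log loss.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # derivative of the log loss w.r.t. the logit
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def score(w: float, b: float, x: float) -> float:
    """The learned function: maps a new input to a score in (0, 1)."""
    return sigmoid(w * x + b)
```

For example, `w, b = train_logistic([0, 1, 2, 3, 4, 5], [0, 0, 0, 1, 1, 1])` learns a function whose score is low for small inputs and high for large ones; that score is what a decision engine would then compare against its thresholds.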

However, assuming that ML can simply be “thrown” at a problem to solve it misunderstands how ML works. Some key assumptions need to be satisfied before a problem can be approached using ML methods.

Assumptions:

- You have data to learn from.
- The data is “labeled” if it is a classification problem, or the outcome is known if it is a regression problem.

In simpler terms, the data contains the inputs and the outputs of the function you’re trying to approximate.

The assumption that you have labeled data is a big one. This is not always going to be the case. When planning for the incorporation of ML into a product, you need to know what the cost and time of data acquisition for the development work is going to be. Typically, there are tradeoffs between the cost/time of data acquisition and the quality/certainty of the data.

Data acquisition and storage

If you are starting to use ML from scratch, it’s very rare to have data that exactly matches the problem at hand. Some data assets are more directly relevant than others. However, an organization typically stores historical data for some purpose other than ML, and has a queryable backlog of events of different types with relevant associated metadata. If you are lucky, these may be the ingredients for the data you need.
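One common way to turn a historical event backlog into training data is to pick a trigger event and label it by whether an outcome event follows within a fixed window. The sketch below assumes a hypothetical log of dicts with `seller_id`, `type`, and `ts` keys and hypothetical event names (`offer_sent`, `offer_accepted`), echoing the marketing-offer example above.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple


def build_training_rows(events: List[dict], as_of: datetime,
                        trigger: str = "offer_sent",
                        outcome: str = "offer_accepted",
                        window: timedelta = timedelta(days=14)) -> List[Tuple[str, datetime, int]]:
    """Turn a historical event log into labeled rows (seller_id, trigger_time, label).

    label = 1 if the seller's outcome event follows the trigger within `window`.
    Triggers whose window has not closed by `as_of` are skipped: their label is not known yet.
    """
    by_seller: Dict[str, List[dict]] = {}
    for event in events:
        by_seller.setdefault(event["seller_id"], []).append(event)

    rows = []
    for seller_id, seller_events in by_seller.items():
        for event in sorted(seller_events, key=lambda e: e["ts"]):
            if event["type"] != trigger:
                continue
            start, end = event["ts"], event["ts"] + window
            if end > as_of:
                continue  # outcome window still open
            label = int(any(e["type"] == outcome and start < e["ts"] <= end
                            for e in seller_events))
            rows.append((seller_id, start, label))
    return rows
```

Skipping triggers whose window has not closed matters: counting them as negatives would mislabel sellers who simply have not had time to respond yet.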

In some cases, there’s no such internal data (at least not yet). Here, it pays to know the relative value and cost of internal and external data acquisition strategies. You need to estimate the costs and benefits of integrating various internal and external data sets into your ML solution.

Usually, data storage and access systems are built with lower-sophistication use cases in mind, such as describing and diagnosing what has happened. When designing these systems, it’s important to plan for future applications of ML, which are concerned with predicting what will happen and what to do about it. This involves the design of what is collected (via the product) as much as how it is stored (the design of the database). If you are not logging and storing potentially useful data now, it’s going to be painful to implement ML later.

Therefore, data collection and storage are important. It is also important to start considering how that data will be stored efficiently and how it can be retrieved by the model-hosting systems that need access to it when scoring. Related concerns include the latency, fidelity, consistency, and immutability of the data.
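Consistency and immutability matter most when the same data is used both to train and to score. A common pattern, sketched below under the assumption of a simple in-memory store, is to append values with timestamps and never overwrite them, so a training pipeline can ask for a value as of a past moment and see exactly what a scoring system would have seen then. `AppendOnlyFeatureLog` and its key format are hypothetical.

```python
import bisect
from datetime import datetime
from typing import Any, Dict, List, Optional


class AppendOnlyFeatureLog:
    """Hypothetical in-memory store: values are appended with timestamps, never overwritten."""

    def __init__(self) -> None:
        self._times: Dict[str, List[datetime]] = {}
        self._values: Dict[str, List[Any]] = {}

    def append(self, key: str, ts: datetime, value: Any) -> None:
        times = self._times.setdefault(key, [])
        values = self._values.setdefault(key, [])
        i = bisect.bisect_right(times, ts)  # keep each key's history sorted by time
        times.insert(i, ts)
        values.insert(i, value)

    def as_of(self, key: str, ts: datetime) -> Optional[Any]:
        """Latest value recorded at or before `ts`, or None if there was none yet."""
        times = self._times.get(key, [])
        i = bisect.bisect_right(times, ts)
        return self._values[key][i - 1] if i else None
```

Building training rows with `as_of(key, trigger_time)` instead of reading the current value avoids leaking information recorded after the moment a prediction would have been made.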

Depending on the maturity of your product or organization in using ML, you may or may not have the data necessary to train a model. There are three basic data states you can be in with regard to supervised learning models:

- You’re optimizing an existing model: data probably exists.
- You’re building a model, product, or feature from scratch: there’s no data.
- You’re iterating on something that exists, maybe making a spinoff: there’s some data that isn’t directly related, but you can use it to warm start your new product. In these cases, there is usually a way to infer performance from some proxy in the available data. Over time, the organization will be able to refine outcomes, labels, and performance definitions to evaluate and improve the calibration of the models.
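When warm starting from a proxy, it helps to check the proxy against true outcomes as soon as some arrive. The sketch below assumes labels are stored as dictionaries from a hypothetical observation ID to 0/1; it returns counts for each (proxy, true) pair alongside the agreement rate, since agreement alone can look high when one class is rare.

```python
from collections import Counter
from typing import Dict, Tuple


def proxy_confusion(proxy: Dict[str, int], truth: Dict[str, int]) -> Tuple[Counter, float]:
    """Compare proxy labels with true outcomes on observations that have both.

    Returns counts keyed by (proxy_label, true_label) and the overall agreement rate.
    """
    shared = proxy.keys() & truth.keys()
    counts = Counter((proxy[k], truth[k]) for k in shared)
    if not shared:
        return counts, float("nan")  # nothing to compare yet
    agreement = (counts[(1, 1)] + counts[(0, 0)]) / len(shared)
    return counts, agreement
```

Re-running this as true outcomes accumulate is one simple way to decide when the proxy can be retired in favor of real labels.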

Data planning roadmap

Let’s consider the trickiest case, when you have little or no data.

One way of generating data on the fly is actually to launch a product relatively blindly.

Since you need positive and negative outcomes to create training data for future modeling, and no such data exists yet, one way to gather information and make progress is simply to launch the product and observe outcomes. By doing this, you are in effect buying the information you need through trial and error. For example, lenders seeking to serve a new customer base need to recalibrate their risk models for the new population. They may start by offering loans using common-sense heuristics and incur short-term losses. They do this knowing that they are buying valuable information, and that these losses will eventually be offset by the longer-term gains of being the first to build up the data needed to serve this unknown market successfully.
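The lender example can be sketched as a small simulation. The approval heuristic, loan size, interest rate, and the `defaults` lookup (which stands in for outcomes that would only be revealed after lending) are all hypothetical. One caveat applies to any launch of this kind: outcomes are observed only for approved applicants, so the resulting training data reflects the heuristic's choices. The optional `explore_rate` shows one way to buy some labels from the declined population as well, at extra cost.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class LaunchLog:
    # (applicant_id, approved, defaulted); defaulted is None when no loan was made.
    rows: List[Tuple[str, bool, Optional[bool]]] = field(default_factory=list)
    net: float = 0.0  # interest earned minus principal lost


def heuristic_approve(applicant: dict) -> bool:
    """Hypothetical common-sense rule used before any risk model exists."""
    return applicant["monthly_revenue"] >= 2_000 and applicant["months_in_business"] >= 6


def run_launch(applicants: List[dict], defaults: Dict[str, bool],
               loan: float = 1_000.0, rate: float = 0.10,
               explore_rate: float = 0.0, seed: int = 0) -> LaunchLog:
    """Lend by heuristic, record every outcome as future training data, tally the cost.

    `defaults[id]` says whether that applicant would default; in reality it is only
    revealed after a loan is made. Losing the full principal on default is a simplification.
    """
    rng = random.Random(seed)
    log = LaunchLog()
    for a in applicants:
        # Optionally approve a random share of declined applicants to buy labels there too.
        approved = heuristic_approve(a) or rng.random() < explore_rate
        if not approved:
            log.rows.append((a["id"], False, None))  # no loan, so no label
            continue
        defaulted = defaults[a["id"]]
        log.net += -loan if defaulted else loan * rate
        log.rows.append((a["id"], True, defaulted))
    return log
```

A negative `net` here is the price paid for the labeled rows in `log.rows`; whether that price is worth it depends on how much the eventual model improves on the heuristic.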

If you are in a small-data or no-data situation, ask yourself the following:

- Speed and volume of data acquisition. How much data do you have? How much do you need? How long will it take to get it, and how much will it cost?
- What is the value of the data vs. the cost of acquiring it? In some cases a proxy for the data is cheaper and easier to obtain than true labels or performance outcomes. These tradeoffs must be evaluated case by case.
- How will we generate labels? What’s the feedback loop? Maybe a human reviews and tags observations; maybe the label is a performance outcome, such as conversion. An operations team is often a useful source of feedback.
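The speed and feedback-loop questions can be tracked with a small label store that records where each label came from and gives a rough estimate of how long it will take to reach a target volume. The target, the source names (`review`, `conversion`), and the constant-rate assumption are hypothetical simplifications.

```python
import math
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class LabelStore:
    target: int  # hypothetical number of labels judged sufficient for a first model
    labels: Dict[str, Tuple[int, str]] = field(default_factory=dict)

    def record(self, obs_id: str, label: int, source: str) -> None:
        """Store a label and where it came from, e.g. "review" (human tag) or "conversion"."""
        self.labels[obs_id] = (label, source)  # the latest label for an observation wins

    def by_source(self) -> Counter:
        """How many current labels came from each feedback source."""
        return Counter(source for _, source in self.labels.values())

    def days_to_target(self, labels_per_day: float) -> int:
        """Naive estimate assuming labels keep arriving at a constant rate."""
        if labels_per_day <= 0:
            raise ValueError("labels_per_day must be positive")
        remaining = max(self.target - len(self.labels), 0)
        return math.ceil(remaining / labels_per_day)
```

Tracking the source of each label also makes it easier to compare, later on, whether human review and observed outcomes agree.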

Conclusion

One high-value application of ML is the automation of routine processes. ML can enable automation by generating scores, which feed into rule-based decision management systems. However, the success of an ML-based solution depends on having the right operational environment. It is crucial for decision makers to understand and plan for the production and development environments their ML solution needs in order to function. It is hard to overstate the importance of the development environment, which begins with collecting, storing, and labeling data. Being a mature company with good data does not guarantee that you have the data needed to solve the problems or automate the processes at hand. The data acquisition planning roadmap proposed here applies just as much to companies getting off the ground as it does to mature companies developing new products and entering new markets.