Comparing Two Forward Feature Selection Algorithms

Speed vs. customizability

I. Introduction

In this post, we review some things we learned from our first experiments on production feature selection applications. We focus on two variants of stepwise selection: (1) The linear stepwise selection method of Efroymson [2], herein known as *linear *forward stepwise, and (2) a custom logistic regression stepwise selection method using two passes through the data that we dub two-pass forward stepwise. Both methods rely on using a simple approach to iteratively select the most relevant features. Once the algorithms finish, the selected features were fed to more complex models, and we use cross-validation to choose how many to keep.

Both methods achieve reasonable performance on feature sets an order of magnitude smaller than the full set. The linear method is fast, and showed robust performance across a variety of evaluation metrics. The two-pass method is slower and somewhat brittle, but achieves exemplary performance on datasets even smaller than those picked by the other method. For both methods, performance usually continues to increase as you add more features.

II. Stepwise methods

A parent set of N features will always have 2^N possible feature subsets, a quantity that grows exponentially with N. This growth rate means that brute force search for an optimal subset at even modest N is rarely feasible [1]. This motivates finding fast, but approximate feature selection search strategies.

Both of the feature selection methods we consider are variants of the forward stepwise selection method. Traditional forward stepwise selection works as follows: We begin our feature selection process by choosing a model class (e.g., either linear or logistic regression). Next, we ask which of the N features on their own would provide the best model in our class for predicting our target. This entails building N separate models, each having access to only one of the features present in our original data set. Once the optimal first feature is identified, it is locked in place. We then iterate: Keeping the first feature selected in place, we ask which second feature, when added to our first, results in the best model having two features. This requires consideration of N-1 separate models. Continuing in this way, we can move through all N features, returning a ranking of the features in order of when they should be added to the retained feature set. Carrying out the full process requires N + (N-1) + (N-2) + … + 1 = N x (N + 1) / 2 model builds.

The actual run time of the above process depends on the model class chosen. If the run time for a single fit is O(N^g), where g is a constant specific to the runtime complexity of a given model type, then the run time of a full forward sweep would appear to be O(N^(2 + g)) — because we need O(N²) model builds, as noted above. This is true for the logistic regression approach (where g=3 [3]), which is disheartening because logistic regression is even faster to train than most of the models we would use in production. However, in the case of linear regression, an efficient method for carrying out stepwise forward selection exists that allows one to carry out the whole algorithm within the same time complexity of a single model build. This is done through efficient updating of the model as features are added to the feature set. See [2] for references and code relating to this approach, first discovered by Efroymson, which we refer to below as the ‘linear’ approach.

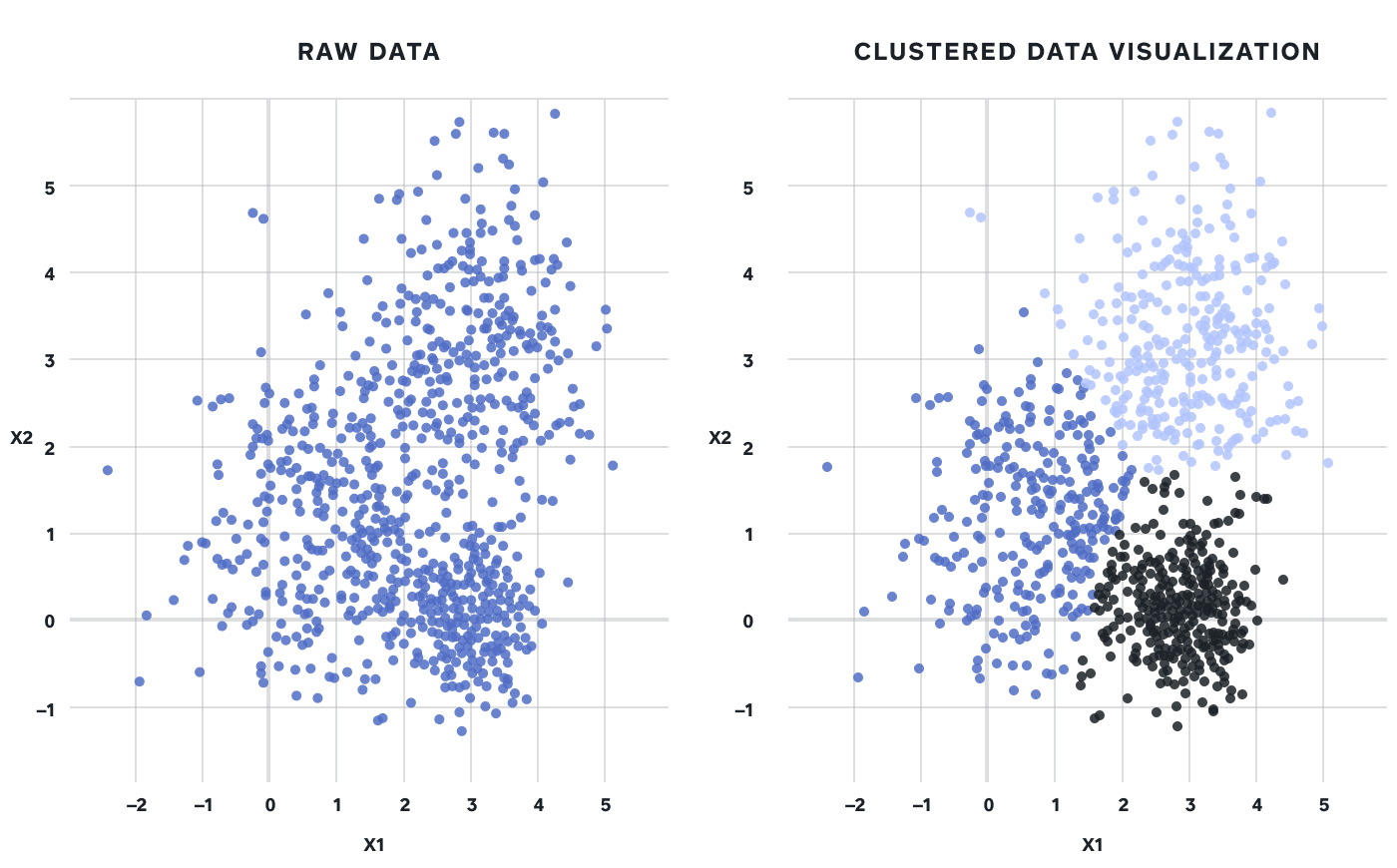

The second method we consider, the two-pass procedure, is also in the family of forward stepwise approaches, and was first devised by Ezzeri Esa (github), a Square data scientist. Instead of seeking to rank the entire feature set, it uses a greedy method that only requires 2N model fits.

The two-pass procedure begins by building a set of small univariate models (here, logistic regressions) on each of the individual features by themselves. It ranks the models according to a chosen metric in descending order. Starting by selecting the feature associated with the top-ranked univariate model, we proceed down the list, fitting models on the selected feature and the next-best candidate feature. When the metric improvement of the selected feature+candidate model exceeds a tunable threshold, the candidate is added to the set of selected features. From there, selection proceeds in a similar fashion, continuing down the list of candidates that have not yet been tried.

If a candidate model does not improve the metric by more than the threshold, it is not reconsidered. In this way, you can complete the selection process in only two passes through the data, one to rank the features to start and another to fit all the candidates as features are added to the selection pool. This allows ranking of features by both metric improvement, though this ranking may not be particularly meaningful for non-selected features, and by selection order, which is definitely not meaningful for non-selected features.

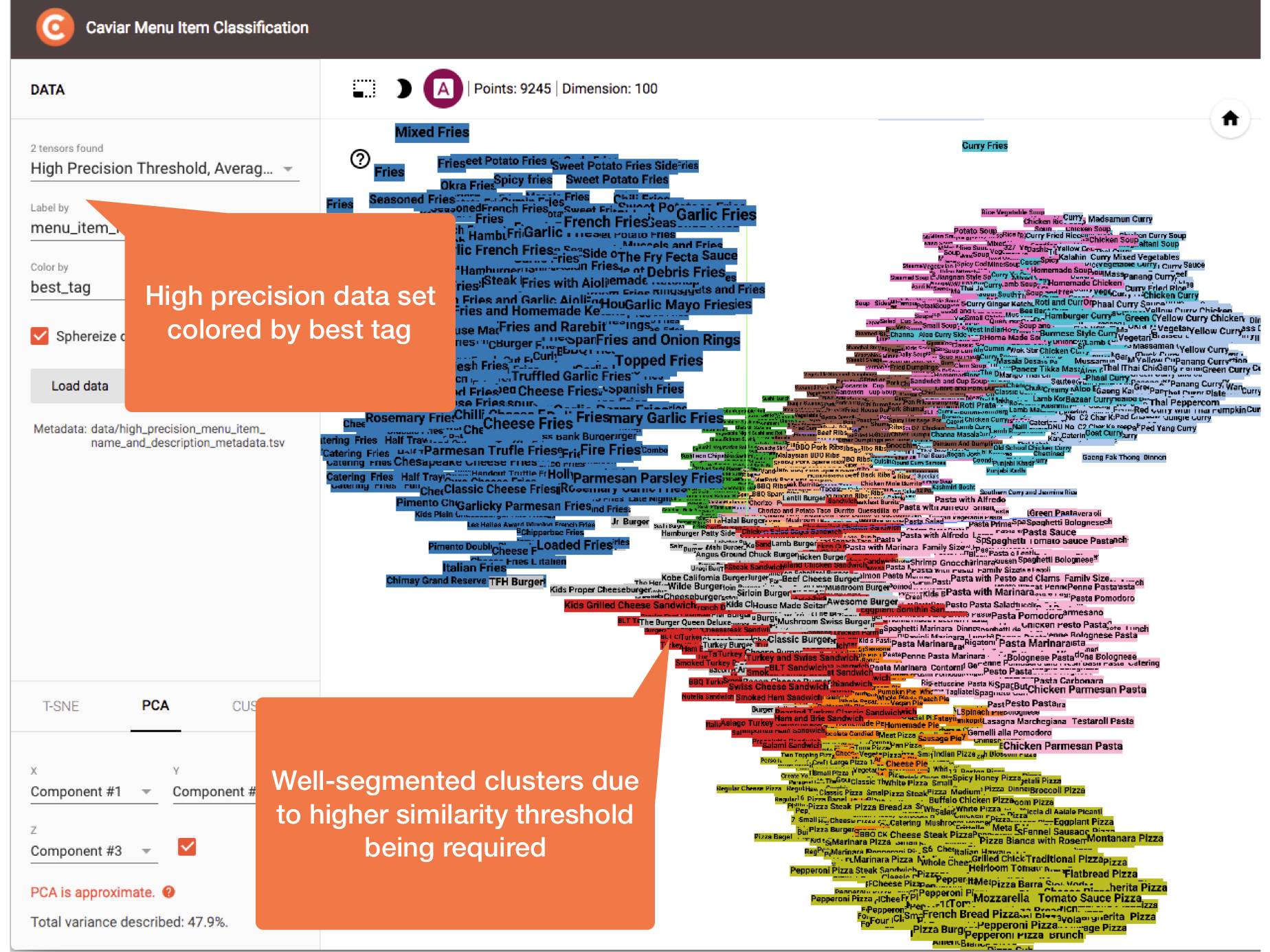

One advantage of the two-pass method is that we can select features to optimize a configurable metric. Being able to configure the metric is appealing because it means you can tailor feature selection to a particular business problem. For instance, in instances of fraud modeling where humans end up reviewing cases flagged by a model, we are often interested in the behavior of the classifier at or below a given precision level, as this allows us to estimate and calibrate caseload for our workforce. This motivates tuning based on minimum recall at or below a fixed precision level, which is not possible to do with linear selection (at least while still retaining Efroymson’s optimizations).

Unfortunately, with the tunable metric (and model form) of the two-pass method, we do not know of any speed-ups such as the one Efroymson discovered. So we have in the two-pass method an O(N^(1+g)) feature selection procedure. We have found in practice that it has unfavorable runtime when it includes more than a few hundred features or a few thousand observations, sometimes taking hours or days to run on one of our laptops. In contrast, the linear approach will often run in seconds on data sets of this size, and can scale to larger data sets as well.

Being able to tune to specific metrics and model types is a good reason to adopt the two-pass method, and time-tested quality and speed are good reasons to adopt the linear method. We set out to test the empirical performance of each on datasets of approximately 2000 available features, against a couple of different performance metrics.

III. Experiments

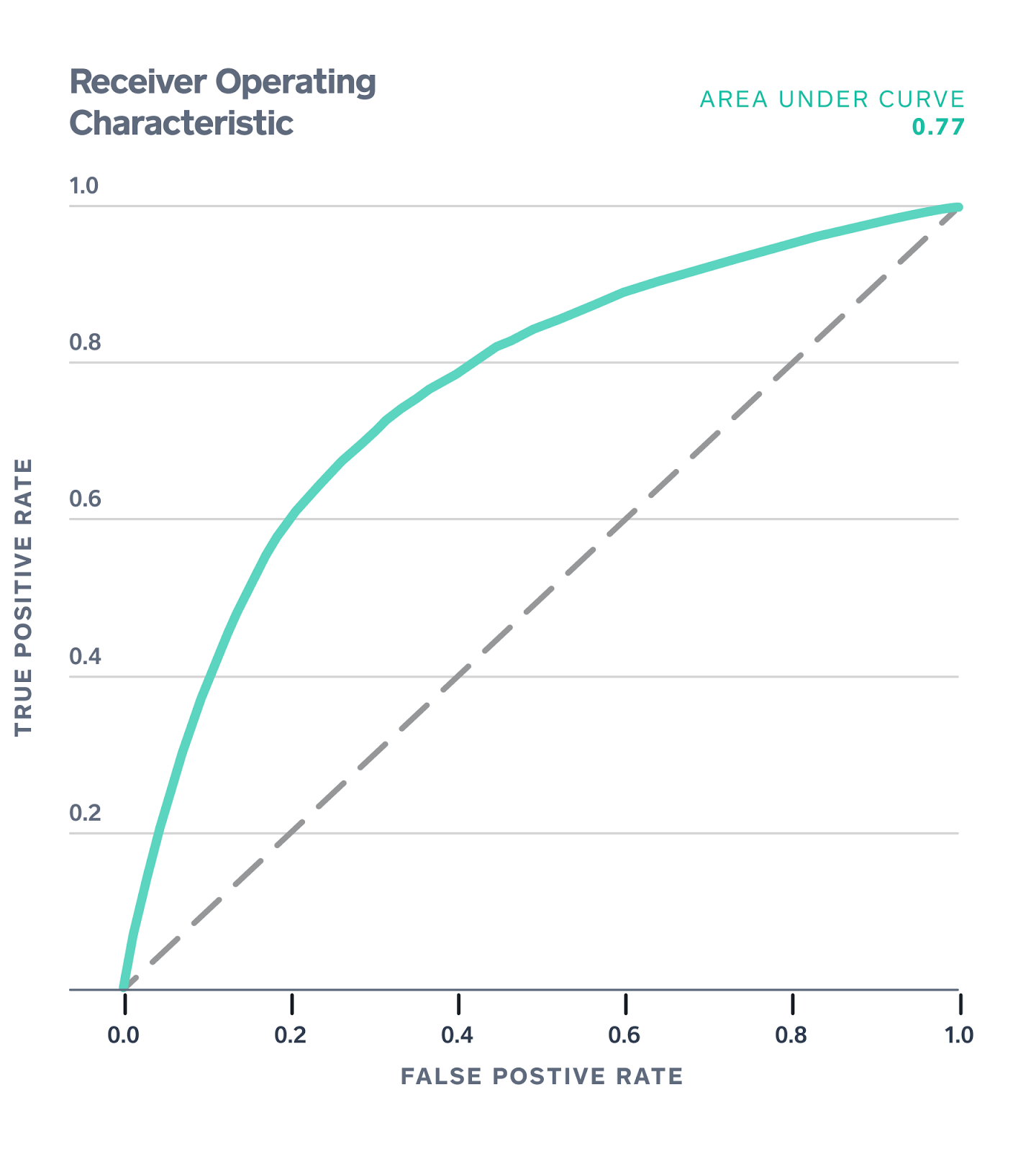

ROC AUC on validation set, top performing configurations.

ROC AUC on validation set, top performing configurations.

Within reasonable constraints, we expect the performance of a model to increase when informative features are added to it. So one measure of a feature selection technique is how much performance we can retain with smaller and smaller numbers of features. In the figure above, both methods find feature subsets that result in comparable performance to the full set, with an order of magnitude fewer features. The fast method maintains excellent performance at a much smaller number of features compared to the smallest performant subset found by the linear method.

To obtain these numbers, we took some data from one of our products and evaluated random forest and boosted tree classifiers on feature subsets of varying sizes as provided by Efroymson and our custom-tuned technique. For each feature subset, we conducted a grid search over number of trees and maximum tree depth to search for the best model on that feature set.

Once a “best” model for each model and available number of features had been identified, we tested each on an unseen holdout set and collected model performance metrics like ROC area-under-curve (AUC) and recall at fixed precision.

The linear method produces a full ranking over the whole feature set, so we trained models on 5, 10, 25, 50, 100, 500, 1000, and all the features — just over 2000. By contrast, the two-pass method does not provide an easy way to rank rejected features, so we took its output at several values of the threshold parameter, which ended up selecting between 20 and 60 features. We told the two-pass method to optimize ROC AUC as the selected metric.

The following figures show the distribution of evaluation metrics for all models fit using feature sets identified by the two selection methods. We show median performance at each feature set size, as well as 95% confidence bounds.

Test ROC AUC by model type, feature set size, and feature selection algorithm.

Test ROC AUC by model type, feature set size, and feature selection algorithm.

As expected, ROC AUC generally grows as the number of features grows. On very large numbers of features, random forests do not increase in performance, but gradient-boosted trees seem to indicate increases in AUC right up to the maximum number of features.

On very low numbers of features, the two-pass method outperforms linear selection. This advantage goes away when we look at performance on another metric, recall at precision=0.33:

Test recall at fixed precision by model type, feature set size, and feature selection algorithm.

Test recall at fixed precision by model type, feature set size, and feature selection algorithm.

On this metric, the trend for increasing performance given larger numbers of features persists, but there is no discernible difference between either method at any feature set size. We have found that, while two-pass selection generally outperforms linear on the metric for which it is tuned (here, ROC AUC), its performance is almost always more brittle on metrics for which it is not tuned.

This pattern is largely the same for F1, though models were very poorly differentiated on this metric, be it across model type, feature size or selection algorithm.

Test F1 by model type, feature set size, and feature selection algorithm.

Test F1 by model type, feature set size, and feature selection algorithm.

IV. Discussion

This comparison shows benefits and disadvantages of both linear and two-pass forward selection relative to each other. Linear selection is lightning-fast and yields robustly-performant subsets of features. If you’re looking for a very small subset of features, however, and you want it to excel on one metric in particular, the two-pass method is great, provided you have the time to wait for it to finish.

We have focused on model performance after feature selection rather than the business reasons one might pursue it, but we should contemplate when automatic feature selection (by any method) might or might not be worthwhile.

One scenario is to tune along a features-observations tradeoff. In a setting where compute or storage is constrained, we often find ourselves constrained between adding more observations and adding more features. Some of our experiments have made use of this tradeoff to eke more performance out of our models.

Another relevant use case is model interpretability. A linear model with a small number of (non-collinear) features has major advantages over many other machine learning approaches because it offers interpretability. For instance, if a risk model scores a seller on only three features — say, how long they’ve been on Square, how much payment volume they’ve processed, and how many chargebacks they’ve received — then when that model flags a merchant, it is easy to determine why it did so. We have performed some other experiments with much more general approaches to interpretability, but sparsely featurized linear models are still a gold standard in much of the industry.

References

[1] Optimal subset selection is NP-hard. See B. K. Natarajan. Sparse approximate solutions to linear systems. SIAM journal on computing, 24(2):227–234, 1995.

[2] M. Efroymson. Multiple regression analysis. Mathematical methods for digital computers, 1:191–203, 1960.

A recent python implementation of the Efroymson approach is available on GitHub here. Mathematical details — with a focus on extensions to unsupervised selection — are discussed in the arXiv paper linked there.

[3] Thesis on runtime complexity of some models here.