Combining Behavior and E-mail Content to Improve Customer Support

How Square Uses Deep Learning to Provide Exceptional Customer Service

Introduction

One of our primary objectives at Square is to provide an exceptional customer experience, especially when customers are entrusting the company with their financing. This is even more critical for us at Square Capital, the lending arm of Square, where our sellers are also borrowers. Our operations team is devoted to this goal and provides consistent, fast, and tailored responses to seller inquiries about loan products.

Every year, the team receives tens of thousands of emails from sellers regarding our products. To keep responses consistent, operations agents (OAs) manually classify emails into more than 20 different categories, such as loan cost explanations, loan eligibility requirements, and loan prepayment. OAs then base their responses on the template email associated with the category. Over the years, inquiry volume has increased in tandem with loan origination volume, prompting the need for a data science solution.

The Square Capital Data Science team sought to alleviate some of the email volume by developing a deep learning model that surfaces topical information to sellers about their inquiry immediately after submission. To accomplish this, we partnered with Square's content and legal teams to create easy-to-read articles for each category, containing information similar to what the operations team would have sent in an email reply. Upon submitting an inquiry, sellers are presented with the relevant articles and may remove themselves from the servicing queue if the content solves their problem. Because a written inquiry can typically be linked to multiple categories, we built our model to return the three most relevant articles for each inquiry.

Our solution

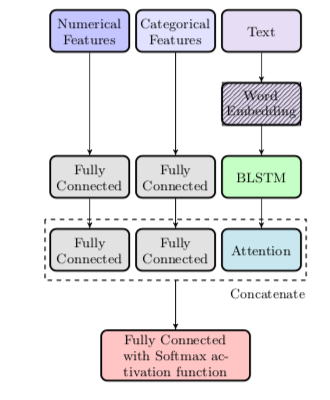

In this project, we describe a robust prediction algorithm for servicing seller inquiries. It combines two distinct deep learning models into a hybrid model that we call the Attention Fusion Network.

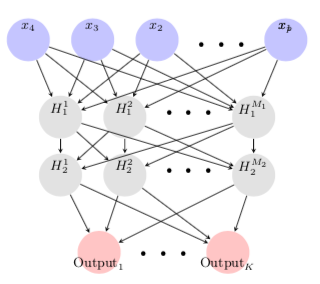

- The first model is a multilayer perceptron (MLP) that uses only data on seller interactions with Square products.

Representation of a 2-hidden layer Neural Network

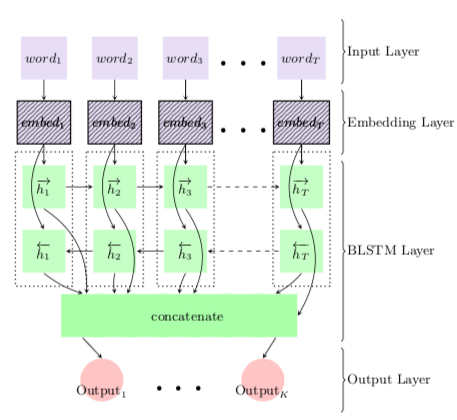

- The second model focuses on the text of inbound email inquiries. We first transform each word into its vector representation using the FastText vocabulary look-up matrix, then encode the sequence of word embeddings into a sentence matrix by feeding it to a bidirectional recurrent neural network (RNN) built from Long Short-Term Memory (LSTM) units. Finally, we use an attention mechanism to summarize the encoded information and make the most important features more salient. This approach follows the Embed, Encode, Attend, Predict (EEAP) framework proposed by Matthew Honnibal.

Representation of a Bidirectional LSTM
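
To make the "attend" step more concrete, here is a minimal NumPy sketch of attention pooling over an encoded sentence matrix. The post does not spell out the exact attention formulation, so this assumes a common additive variant (a tanh projection scored against a learned context vector). The names `attention_pool`, `W`, `b` and `u` are hypothetical, and a random matrix stands in for the bidirectional LSTM outputs.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    shifted = x - np.max(x)
    exps = np.exp(shifted)
    return exps / np.sum(exps)


def attention_pool(H, W, b, u):
    """Summarize a sentence matrix into one vector.

    H: (T, d) encoded states, one row per token (stand-in for BiLSTM outputs).
    W: (d, a) projection, b: (a,) bias, u: (a,) context vector (all assumed learned).
    Returns the (d,) summary vector and the (T,) attention weights.
    """
    scores = np.tanh(H @ W + b) @ u   # one relevance score per token, shape (T,)
    weights = softmax(scores)         # normalize scores into weights that sum to 1
    summary = weights @ H             # weighted average of the token states, shape (d,)
    return summary, weights


# Toy example: 6 tokens, 8-dimensional encoded states, 4-dimensional attention space.
rng = np.random.default_rng(0)
T, d, a = 6, 8, 4
H = rng.normal(size=(T, d))
W = rng.normal(size=(d, a))
b = np.zeros(a)
u = rng.normal(size=a)

summary, weights = attention_pool(H, W, b, u)
```

Tokens with larger weights contribute more to the summary vector, which is one way the most important parts of an inquiry can be made more salient.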

The last hidden layers of the MLP model and of the Attention RNN model are concatenated and fed to a softmax activation function to compute the class probabilities.
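
The fusion step can be sketched as follows. This is an illustration under stated assumptions, not our production code: it assumes a single dense output layer (`W_out`, `b_out`) applied to the concatenated vector before the softmax, and it uses random vectors in place of the real MLP and attention RNN outputs. The function name, layer sizes and the 22-class count are all hypothetical.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    shifted = x - np.max(x)
    exps = np.exp(shifted)
    return exps / np.sum(exps)


def fuse_and_predict(behavior_hidden, text_summary, W_out, b_out, k=3):
    """Concatenate both representations and return class probabilities and top-k classes.

    behavior_hidden: (m,) last hidden layer of the MLP.
    text_summary:    (d,) last hidden layer of the attention RNN.
    W_out: (n_classes, m + d) and b_out: (n_classes,) form the assumed output layer.
    """
    fused = np.concatenate([behavior_hidden, text_summary])  # shape (m + d,)
    probs = softmax(W_out @ fused + b_out)                    # shape (n_classes,)
    top_k = np.argsort(probs)[::-1][:k]                       # most probable classes first
    return probs, top_k


# Toy example with hypothetical sizes.
rng = np.random.default_rng(1)
m, d, n_classes = 16, 8, 22
behavior_hidden = rng.normal(size=m)
text_summary = rng.normal(size=d)
W_out = rng.normal(size=(n_classes, m + d))
b_out = np.zeros(n_classes)

probs, top_articles = fuse_and_predict(behavior_hidden, text_summary, W_out, b_out)
```

Here `top_articles` holds the indices of the three categories whose articles would be shown to the seller.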

Representation of the Attention Fusion Network model

Results

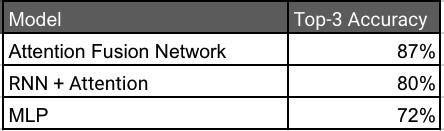

Overall, our solution outperforms both candidate models and shows that complex interactions between customer signals and inquiry context can be leveraged to inform better predictions.

Comparison of model performance using top-3 accuracy
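
For readers unfamiliar with the metric, top-3 accuracy counts a prediction as correct when the true category is among the three highest-scoring classes. Below is a small NumPy sketch; the function name and the toy probabilities are made up for illustration and are not our evaluation data.

```python
import numpy as np


def top_k_accuracy(probs, labels, k=3):
    """Fraction of rows whose true label is among the k highest-probability classes.

    probs:  (n_samples, n_classes) predicted class probabilities.
    labels: (n_samples,) integer class labels.
    """
    probs = np.asarray(probs)
    labels = np.asarray(labels)
    top_k = np.argsort(probs, axis=1)[:, -k:]          # indices of the k largest per row
    hits = (top_k == labels[:, None]).any(axis=1)      # is the true label among them?
    return float(hits.mean())


# Toy data: 4 inquiries, 5 categories.
probs = np.array([
    [0.50, 0.20, 0.15, 0.10, 0.05],  # true label 0 is in the top 3
    [0.05, 0.10, 0.15, 0.20, 0.50],  # true label 0 is not in the top 3
    [0.30, 0.05, 0.25, 0.35, 0.05],  # true label 2 is in the top 3
    [0.10, 0.40, 0.05, 0.05, 0.40],  # true label 3 is not in the top 3
])
labels = [0, 0, 2, 3]

print(top_k_accuracy(probs, labels, k=3))  # 0.5
```

This metric fits the product design, since sellers are shown three articles and only one of them needs to answer the question.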

Conclusion

One of the most interesting aspects of this work is uncovering the interaction between textual features and non-text features. In particular, upon combining the two sources, the overall predictive ability is improved substantially. The non-text signals help amplify critical aspects of the text that are impossible to learn without additional context.

This result suggests that in industrial applications of NLP where a large number of text samples is not available, or where the underlying text cannot predict the outcomes on its own, available external signals can be leveraged to improve prediction performance on the task at hand. The Attention Fusion Network introduced here is one attempt to accomplish this goal, as well as to provide the fastest and most tailored response to our sellers.

You will find our paper at https://arxiv.org/pdf/1811.03169.pdf.