Success Metrics for Product Analytics

Metrics are not a replacement for strategy

Why do we use primary success metrics for product launches? Two reasons: (1) to align with the broader company goals (i.e. to make sure we're not rowing in different directions), and (2) to determine the effect of a product change relative to what we expected to happen. We want to test the hypothesis about customer behavior that we put forward, to see how theory meets practice in the wild. Product stakeholders also generally want agreed-upon decision criteria beforehand to determine whether we roll out a product change or not. The reason we dig into secondary metrics is to get a fuller picture of what happened and to help safeguard against moving one metric at the expense of another (e.g. increasing conversion rate by decreasing our population denominator). We want a more complete story to learn about the impact of what we did, not to bypass the primary success metrics as the decision criteria.

One of the most important points to make here is setting expectations beforehand. We don't want to just bank on the brilliant and magnificent product update to lift revenue, we want to have a plan regardless of the outcome. If the results are very negative, typically we'll call the experiment early to reduce the damage; we were wrong and we want to learn fast. If they are very positive, then everyone is happy and we share it out and look good on every level. However, quite often we are in the big nebulous area of neutral results; we don’t observe a statistically significant difference between treatment and control groups, so the success metrics don’t tell us what to do. Neutral results are only a problem if there is no plan for what to do next.

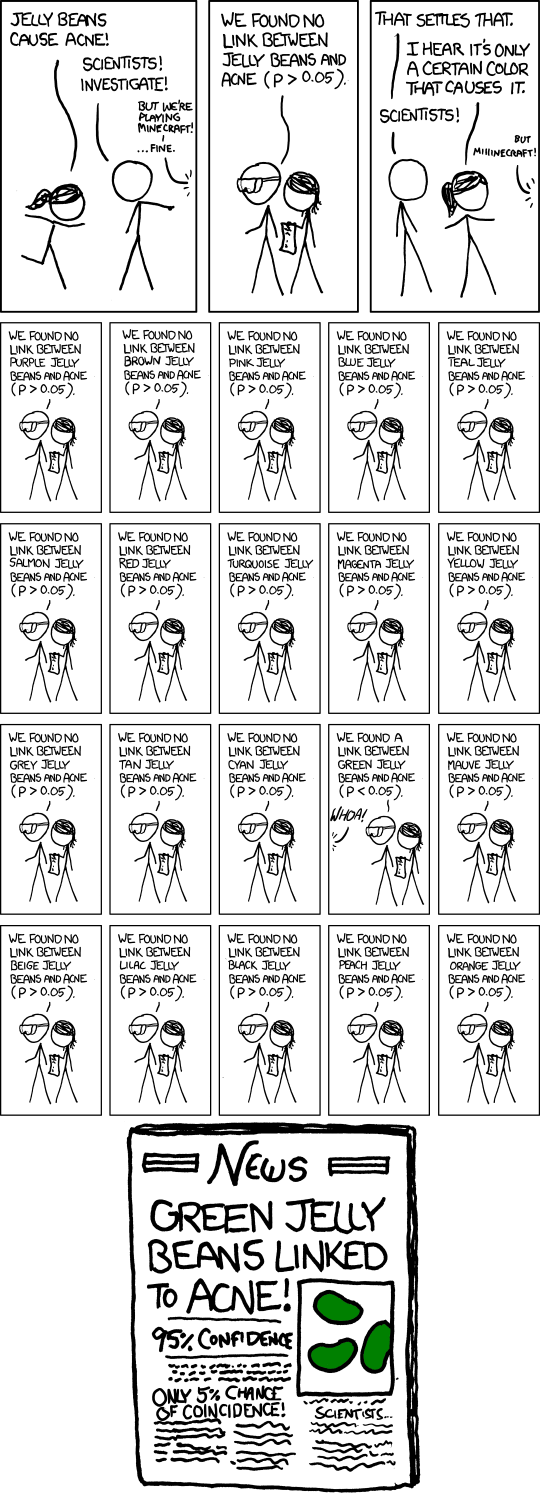

When experiment results are neutral, some common mistakes are (1) to run the experiment longer and wait for 'statistical significance' to arrive, (2) to look elsewhere for results by digging deeply into different segments or secondary metrics until we find one that did reach statistical significance, or (3) any other form of p-hacking (such as re-running the experiment). These approaches all put the pressure on metrics to make the decision. Success metrics are not a replacement for strategy; they are a way to confirm whether the strategy was executed successfully. We also get to know how confident we are in these results, which we will get into later.
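To see why waiting for significance is risky, here is a minimal simulation sketch. It assumes an A/A setup (both groups share the same true conversion rate, so any "significant" result is a false positive) and compares checking the p-value once at the end against checking it at every interim look. The function names, the 10% conversion rate, and the look sizes are illustrative assumptions, not figures from a real experiment.

```python
import random
from math import sqrt
from statistics import NormalDist


def two_sided_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no variation at all, so no evidence of a difference
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(n_experiments=1000, looks=10, users_per_look=200,
                      rate=0.10, alpha=0.05, seed=7):
    """Return (false positive rate with peeking, false positive rate at final look)."""
    rng = random.Random(seed)
    peeking_hits = 0
    final_hits = 0
    for _experiment in range(n_experiments):
        conv_a = conv_b = n = 0
        crossed_at_any_look = False
        p = 1.0
        for _look in range(looks):
            # Both arms draw from the SAME true rate: the true effect is zero.
            conv_a += sum(rng.random() < rate for _ in range(users_per_look))
            conv_b += sum(rng.random() < rate for _ in range(users_per_look))
            n += users_per_look
            p = two_sided_p_value(conv_a, n, conv_b, n)
            if p < alpha:
                crossed_at_any_look = True
        peeking_hits += crossed_at_any_look
        final_hits += p < alpha  # p now holds the final look's p-value
    return peeking_hits / n_experiments, final_hits / n_experiments


if __name__ == "__main__":
    peeking_rate, final_rate = simulate_aa_tests()
    print(f"false positives when stopping at any significant look: {peeking_rate:.1%}")
    print(f"false positives when testing once at the end:          {final_rate:.1%}")
```

Under these assumptions, the single final test should land near the nominal 5% false positive rate, while stopping at the first significant look typically produces a noticeably higher rate. The same logic applies to slicing into many segments: every extra look is another chance for noise to cross the threshold.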

Unfortunately, A/B testing is often seen as simply a gate for a product launch. This is where expectation setting from data scientists comes in: we want to communicate the different possible outcomes and align on decision-making criteria prior to the start of an experiment to avoid biased decision making. Sometimes we still launch on neutral results because of other considerations, such as unblocking a future product launch or a required back-end change. There is a cost to the product work here, and it needs to be taken into consideration. But it is important to tell stakeholders up front that neutral results are in themselves a fair answer from metrics. It just means the metrics don't point clearly to one decision or the other; the p-value tells us the likelihood of seeing a difference between test and control groups at least as large as the one observed if the true effect was zero. That's it. Unfortunately, metrics and statistical significance are often over-extended in an attempt to overcome product strategy gaps and organizational challenges.

To take a step back, product data scientists are not only there to inform decision making with data, but also to quantify risks. A product team could hire another engineer instead of a product data scientist if they want to build more things, but they may lose some of the in-depth insights into the product that data scientists can provide, and potentially risk weakening their understanding of customer behavior and the impact of their work over time. Product managers (PMs) often need to balance risk and reward in their decisions, and data scientists want to make sure they are not flying blind. Arguably, the most important outcomes to understand in an experiment are the 'definite yes' and 'definite no', since those are the ones that really move our metrics. However, we also want to avoid launching ineffective designs into production. This is part of the value data science and product analytics can collectively provide.

Product data scientists (at least when embedded within product teams like at Square) are tasked with adding value to a product space with data. Metrics can give us some neutrality to look more broadly at impact to the average customer and confirm whether an observed outcome aligns with our expectations. We have to be honest for the sake of long-term success, which often depends on facing short-term hard truths about mediocre results.

"But the essential difficulty in creating strategy is not logical; it is choice itself. Strategy does not eliminate scarcity and its consequences―the necessity of choice. Strategy is scarcity's child and to have a strategy, rather than vague aspirations, is to choose one path and eschew others. There is difficult psychological, political, and organizational work in saying 'no' to a whole world of hopes, dreams, and aspirations. When a strategy works, we tend to remember what was accomplished, not the possibilities that were painfully set aside."

- Richard Rumelt, Good Strategy Bad Strategy

The basic question from a stakeholder after an experiment is: 'did it go up or go down?' The answer gives us directional insight, but doesn't tell us how confident we are in those results, i.e. how big a risk we'd take by rolling out the change to all users. Maybe the chosen metric went down for the randomized treatment group, but by such a small amount that we think it was just statistical noise; the same caution applies if it went up. So what we really want to know is: 'how confident are we that these results are due to the changes we made?'
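One way to move from 'did it go up?' to 'how confident are we?' is to report an interval around the observed difference rather than the difference alone. The sketch below is a minimal illustration, assuming a conversion metric and a normal-approximation (Wald) interval; the function name and the example counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def conversion_diff_interval(conv_control, n_control, conv_treatment, n_treatment,
                             confidence=0.95):
    """Normal-approximation confidence interval for (treatment - control) conversion."""
    p_c = conv_control / n_control
    p_t = conv_treatment / n_treatment
    diff = p_t - p_c
    # Unpooled standard error of the difference between two proportions.
    se = sqrt(p_c * (1 - p_c) / n_control + p_t * (1 - p_t) / n_treatment)
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return diff - z * se, diff + z * se


if __name__ == "__main__":
    # Hypothetical counts: 10.0% vs 10.5% conversion on 10,000 users per group.
    low, high = conversion_diff_interval(1000, 10_000, 1050, 10_000)
    print(f"observed lift: +0.5 points, 95% interval: [{low:+.4f}, {high:+.4f}]")
```

In this made-up example the metric 'went up', but the interval spans zero, so the data are consistent with no effect or even a small decline. That is the difference between a directional read and a confident one.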

Data scientists often use chi-squared tests or Z-tests for conversion rates, and t-tests for differences between groups in continuous variables such as revenue, assuming a nearly normal distribution. A lot has been written about them in many fields, so I won't go into much detail here. If the normality assumption is violated, permutation testing can help: it repeatedly shuffles observations between the groups to see how often chance alone produces a difference as large as the one observed, and it can be run with and without outliers. But the root issue is that when the statistics don't reveal an obvious answer, that is itself the answer. A p-value quantifies the likelihood of observing a result as extreme as, or more extreme than, the one we did if the true impact of the test is zero. When that p-value is above our threshold, there is insufficient evidence in the data to reject the null hypothesis; for non-technical stakeholders, this means that all our statistical techniques and analysis are telling us 'we don't know'. And even if we DO hit that sweet <0.05 p-value, setting our confidence level at 95% means accepting that, when a change truly has no effect, we will still call it a win (or loss) about 1 in every 20 times. We could use a stricter threshold, like 1 in 100 (p-value <0.01), but then we'll be 'neutral' a lot more often OR we'll have to run our experiments a lot longer (which is costly).
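For readers who want to see the mechanics, here is a minimal sketch of two of the tests mentioned above, written with only the Python standard library: a pooled two-proportion Z-test for conversion, and a permutation test for a continuous metric such as revenue per user. The function names and sample data are illustrative assumptions; in practice a statistics library would usually be the better choice.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def two_proportion_z_test(conv_control, n_control, conv_treatment, n_treatment):
    """Two-sided Z-test for a difference in conversion rates (pooled variance)."""
    pooled = (conv_control + conv_treatment) / (n_control + n_treatment)
    se = sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_treatment))
    z = (conv_treatment / n_treatment - conv_control / n_control) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def permutation_test(control, treatment, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in means.

    Shuffles observations between groups to approximate the distribution of
    the difference we would see if group membership did not matter.
    """
    rng = random.Random(seed)
    observed = fmean(treatment) - fmean(control)
    combined = list(control) + list(treatment)
    n_control = len(control)
    as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(combined)
        diff = fmean(combined[n_control:]) - fmean(combined[:n_control])
        if abs(diff) >= abs(observed):
            as_extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    p_value = (as_extreme + 1) / (n_permutations + 1)
    return observed, p_value


if __name__ == "__main__":
    # Hypothetical conversion counts: 1,000 vs 1,050 out of 10,000 users each.
    z, p = two_proportion_z_test(1000, 10_000, 1050, 10_000)
    print(f"conversion: z = {z:.2f}, p = {p:.3f}")

    # Hypothetical skewed revenue data with one large outlier in treatment.
    control = [0, 0, 5, 12, 0, 8, 0, 20, 3, 0]
    treatment = [0, 4, 0, 15, 0, 9, 0, 400, 6, 0]
    diff, p = permutation_test(control, treatment)
    print(f"revenue: mean difference = {diff:.1f}, p = {p:.3f}")
```

Re-running the permutation test with the outlier removed is a quick way to check how much a single large customer drives the apparent difference.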

The real value of A/B testing for product launches is having a check against our intuition—it's remembering that we have a hypothesis to test, not a certainty to unfold. If a product team did not have a data scientist or analyst, they might essentially be basing product decisions on their understanding of customers and product spaces, which doesn't scale very well and can result in a lot of work that doesn't move the needle over time. Granted, there needs to be a balance of product intuition, customer empathy, and quantitative analysis to improve customer experiences.

"...statistics isn't about data, but distillation, rigor, and avoiding being fooled by randomness."

- Nassim Nicholas Taleb, Skin in the Game.

Analytics and A/B testing are a hedge against risk; they strengthen our decision making, create a shield against randomness, and provide an environment for deeper learning. Besides the clear value of giving us a strong yes or a strong no, experimentation also tells us when we just don't know. It tells us the tradeoffs and the likelihood of our outcomes being random, i.e. how much we don't know. But success metrics do not replace strategy or solve organizational challenges; they just shore up confidence in our decisions and give us a sense of the impact of our work. Knowing these limits makes communicating expectations much clearer. It keeps people from throwing out the results entirely, or from running an experiment longer and longer in the hope of hitting some target that would justify the work already invested, which is the sunk cost fallacy in action. Sometimes all we can do is save time, and avoid pretending we know something when we don't.

An often overlooked area of importance, especially when consulting with product teams and establishing success metrics, is effective communication. This involves important soft skills like establishing rapport and a shared language with stakeholders, giving honest feedback with psychological safety, and setting expectations beforehand that our hypothesis could be completely wrong (or, arguably worse, unclear) in order to flesh out our strategy. Especially when new to a product team, a data scientist needs to be able to say when we can't tell whether an effect was more than random noise, and to communicate the limits of what we do know. While disappointing and frustrating at times, this helps prevent worse outcomes in the long run. If we find out through many creative and clever experiments that we cannot move the needle in the spaces we want, it means it's time to focus our efforts elsewhere and invest our time in a more fruitful area as a company.
