Ruby’s New JIT

Ruby is about to get Way Faster

CRuby just got a JIT!

There have been many attempts at implementing a JIT for CRuby, the reference implementation of Ruby. None have been merged, until now.

TL;DR: Ruby 2.6 will have an optional --jit flag that will increase startup time and take more memory in exchange for blazing speed once warmed up.

Previous Ruby JIT Attempts

Some previous attempts at a JIT for Ruby, like rujit, have been successful at speeding up Ruby at the cost of excessive memory usage. Other attempts, like OMR + Ruby, use an existing JIT library such as Eclipse OMR. Another example, llrb, uses the JIT library that ships with LLVM. The biggest perceived problem with these implementations is that JIT libraries are moving targets, and tie Ruby’s survival to the unknown future of these underlying JIT projects.

A Huge Leap Forward: RTL MJIT

Vladimir Makarov is no stranger to Ruby performance. His reimplementation of hash tables in Ruby 2.4 sped up hashes considerably.

In 2017, Makarov undertook a major new project, called RTL MJIT, rewriting the way Ruby’s intermediate representation (IR) works and adding a JIT at the same time. In this incredibly ambitious project, the existing YARV instructions were replaced entirely with a brand-new instruction set in Register Transfer Language (RTL). Makarov also created a JIT called MJIT, which produces C code from the RTL instructions, and then compiles that C code into native code with existing compilers.

The problem with Makarov’s implementation is that the brand new RTL instructions meant a major rewrite of Ruby’s internals. It could take years to polish this work to a point where it’s stable and ready to be merged into Ruby. Ruby 3 may even ship with the new RTL instructions, but that hasn’t yet been decided (and would be years away).

The JIT Merged into Ruby: YARV MJIT

Takashi Kokubun is no stranger to JITs and Ruby performance either. He was the author of the llrb JIT, and sped up Ruby’s ERB and RDoc generation by several times in Ruby 2.5.

Kokubun took Makarov’s work on RTL MJIT and extracted the JIT portion while maintaining Ruby’s existing YARV bytecode. He also pared down MJIT to a minimal form, without advanced optimizations, so it could be introduced without major disruption to other parts of Ruby.

Kokubun’s work was merged into Ruby and will be released with Ruby 2.6 on Christmas 2018. If you’d like to try out the JIT now, you can do so with Ruby’s nightly builds. The performance improvements are fairly modest at the moment, but there’s plenty of time to add optimizations before Ruby 2.6 is released. Kokubun’s strategy for safe, gradual improvement worked. Ruby has a JIT!

How it Works

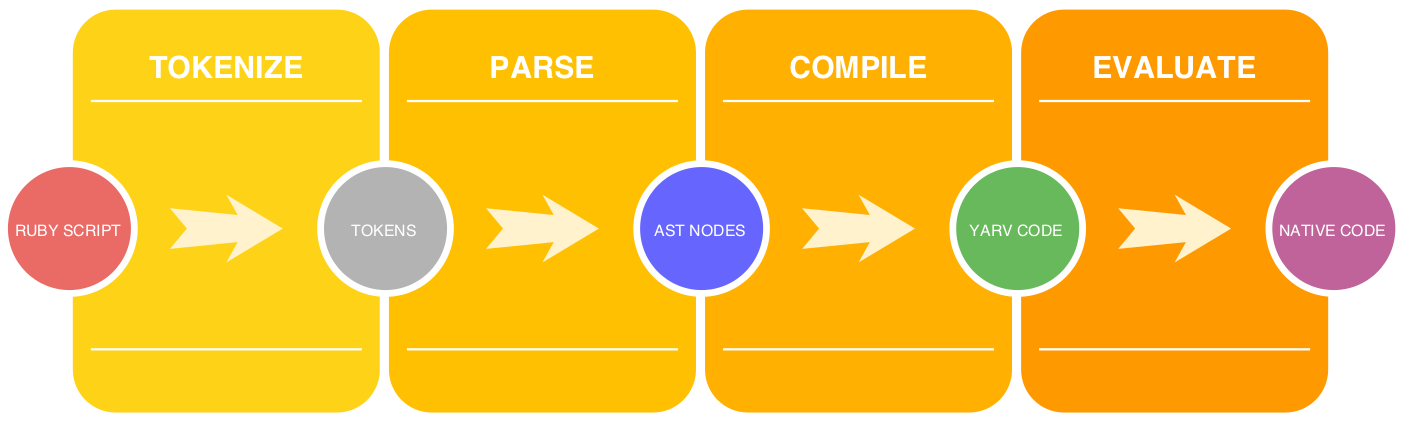

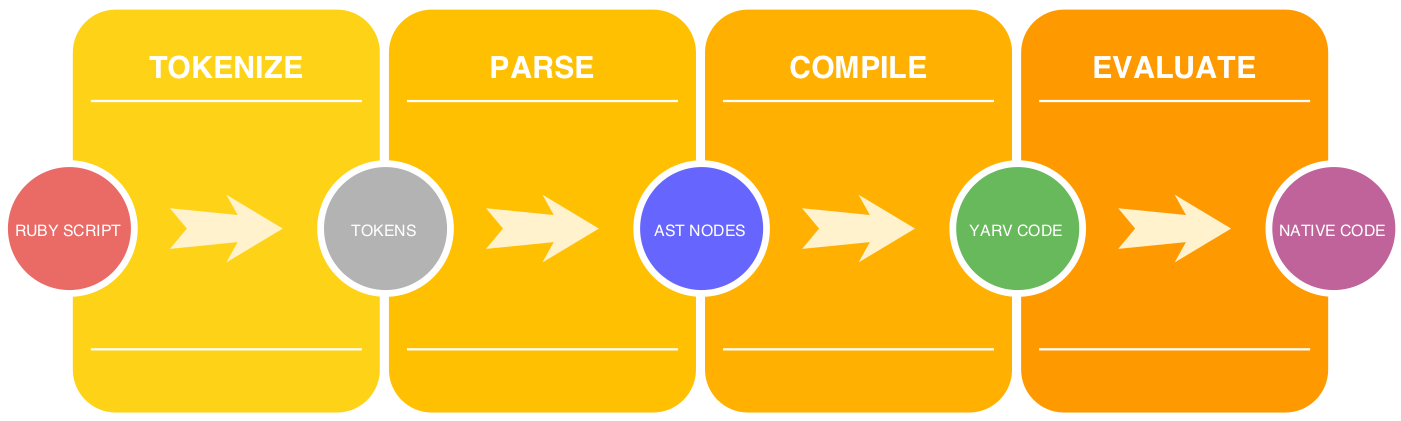

Getting to YARV Instructions

Ruby has to go through a few steps in order to run your code. First, the code is tokenized, parsed and compiled into YARV instructions. This part of the process comprises about 30% of the total time a Ruby program takes.

We can see each of these steps using RubyVM::InstructionSequence and Ripper from the stdlib.

require 'ripper'

##

# Ruby Code

code = '3 + 3'

##

# Tokens

Ripper.tokenize code

#=> ["3", " ", "+", " ", "3"]

##

# S-Expression

Ripper.sexp code

#=> [:program, [[:binary, [:@int, "3", [1, 0]], :+, [:@int, "3", [1, 4]]]]]

##

# YARV Instructions

puts RubyVM::InstructionSequence.compile(code).disasm

#>> == disasm: #<ISeq:<compiled>@<compiled>:1 (1,0)-(1,5)>==================

#>> 0000 putobject 3 ( 1)[Li]

#>> 0002 putobject 3

#>> 0004 opt_plus <callinfo!mid:+, argc:1, ARGS_SIMPLE>, <callcache>

#>> 0007 leave

##

# YARV Bytecode

RubyVM::InstructionSequence.compile(code).to_binary

#=> "YARB\x02\x00\x00\x00\x06\x00\x00\x003\x02\x00\x00\x00\x00\x00\x00\x01\x00\x00\x00\x02\x00\x00\x00\x05\x00\x00\x00\xA4\x01\x00\x00\xA8\x01\x00\x00\x1F\x02\x00\x00x86_64-darwin17\x00\x12\x00\x00\x00\x00\x00\x00\x00\x03\x00\x00\x00\x00\x00\x00\x00\x12\x00\x00\x00\x00\x00\x00\x00\x03\x00\x00\x00\x00\x00\x00\x00C\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x008\x00\x00\x00\x00\x00\x00\x00\x01\x00\x00\x00\x01\x00\x00\x00\x01\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x02\x00\x00\x00\x01\x00\x00\x00\x00\x00\x00\x00\x10\x00\x00\x00\x01\x00\x00\x00\x00\x00\x00\x00\b\x00\x00\x00<\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x01\x00\x00\x00\x00\x00\x00\x00\x02\x00\x00\x00\x00\x00\x00\x00\x02\x00\x00\x00\x00\x00\x00\x00\x03\x00\x00\x00\x00\x00\x00\x00\x01\x00\x00\x00\x00\x00\x00\x00\x01\x00\x00\x00\x05\x00\x00\x00|\x00\x00\x00\x00\x00\x00\x00\x8C\x00\x00\x00\x00\x00\x00\x00\x02\x00\x00\x00\x00\x00\x00\x00P8\xD3\x0E\x01\x00\x00\x00\x94\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\xFF\xFF\xFF\xFF\xFF\xFF\xFF\xFF\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x94\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x01\x00\x00\x00\x00\x00\x00\x00\x02\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\xA4\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x04\x00\x00\x00\x00\x00\x00\x00\xF1\x00\x00\x00\b\x00\x00\x00\x00\x00\x00\x00G\x00\x00\x00\x02\x00\x00\x00\x00\x00\x00\x00\x02\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00\x00E\x00\x00\x00\x02\x00\x00\x00\x00\x00\x00\x00\n\x00\x00\x00\x00\x00\x00\x00<compiled>\xF5\x00\x00\x00\a\x00\x00\x00\x00\x00\x00\x00E\x00\x00\x00\x02\x00\x00\x00
\x00\x00\x00\x00\x01\x00\x00\x00\x00\x00\x00\x00+\xB8\x01\x00\x00\xC4\x01\x00\x00\xE0\x01\x00\x00\xFE\x01\x00\x00\n\x02\x00\x00"

Projects like yomikomu and bootsnap show how you can speed up Ruby by caching the YARV instructions to disk. In doing so, the instructions don’t have to be parsed and compiled to YARV instructions after the first time the Ruby script is run, unless there are changes to the code. This doesn’t speed up Ruby on the first run, but subsequent executions without code changes will be about 30% faster—because they can skip parsing and compiling to YARV instructions.

This strategy of caching the compiled YARV instructions actually has nothing to do with the JIT, but it’s a strategy that’s going to be used in Rails 5.2 (via bootsnap) and likely in future versions of Ruby, too. The JIT only comes into play after YARV instructions exist.
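To make the caching idea concrete, here is a minimal sketch that uses only `RubyVM::InstructionSequence#to_binary` and `RubyVM::InstructionSequence.load_from_binary` from CRuby. It is not how bootsnap or yomikomu are implemented. The cache file name `example.yarb` and the temporary directory are assumptions made purely for illustration.

```ruby
require 'tmpdir'

code = '3 + 3'
cached_result = nil

Dir.mktmpdir do |dir|
  # Hypothetical cache location, chosen only for this example.
  cache_path = File.join(dir, 'example.yarb')

  # First run: tokenize, parse and compile, then serialize the instructions.
  iseq = RubyVM::InstructionSequence.compile(code)
  File.binwrite(cache_path, iseq.to_binary)

  # Later runs: load the serialized instructions, skipping parse and compile.
  cached_iseq = RubyVM::InstructionSequence.load_from_binary(File.binread(cache_path))
  cached_result = cached_iseq.eval
end

puts cached_result # prints 6
```

The serialized form is tied to the Ruby version and platform that produced it (note the `x86_64-darwin17` string in the dump above), so a real cache would need to be invalidated when either of those changes, as well as when the source file changes.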

JIT Compiling the YARV Instructions

Once the YARV instructions exist, it’s the RubyVM’s responsibility at runtime to evaluate those instructions, in effect carrying each one out as native operations appropriate for the operating system and CPU that you’re using. This process consumes about 70% of the time it takes to run a Ruby program, so it’s the bulk of the runtime.

This is where the JIT comes into play. Instead of evaluating the YARV instructions each time they’re encountered, certain calls can be turned into native code, so when the RubyVM sees it a second or subsequent time, the native code can be used directly.

“This is an ERB template that generates Ruby code that generates C code that generates JIT-ed C code.” ~mjit_compile.inc.erb

With MJIT, certain Ruby YARV instructions are converted to C code and put into a .c file, which is compiled by GCC or Clang into a .so dynamic library file. The RubyVM can then use that cached, precompiled native code from the dynamic library the next time the RubyVM sees that same YARV instruction.

Deoptimization

However, Ruby is a dynamic language, and even core class methods can be redefined at runtime. There needs to be some mechanism to check if the call that is cached in native code has been redefined. If the call has been redefined, the cache needs to be flushed and the instruction needs to be interpreted as if there were no JIT. This process of falling back to evaluating the instruction when something changes is called deoptimization.

##

# YARV instructions for `3 + 3`:

RubyVM::InstructionSequence.compile('3 + 3').to_a.last

#=> [1,

:RUBY_EVENT_LINE,

[:putobject, 3],

[:putobject, 3],

[:opt_plus, {:mid=>:+, :flag=>16, :orig_argc=>1}, false],

[:leave]]

##

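# Simplified pseudo-C sketch of the code MJIT creates from the `:opt_plus` instruction above:

VALUE opt_plus(VALUE a, VALUE b) {

  /* Guard: the fast path is only valid while Integer#+ has not been redefined. */

  if (not_redefined(int_plus)) {

    return a + b;

  } else {

    /* Deoptimize: fall back to the interpreter. */

    return vm_exec();

  }

}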

Note, in the example above, that the C code MJIT produces will deoptimize and re-evaluate the instruction if the call is redefined. This takes advantage of the fact that most of the time we’re not redefining addition, so we get to use the compiled, native code with the JIT. Each time the C code is evaluated, it will make sure that the operation it’s optimizing hasn’t changed. If there’s a change, it’s deoptimized and the instruction is re-evaluated by the RubyVM.
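The same guard-and-fall-back pattern can be sketched in plain Ruby. This is only an analogy, not how MJIT performs the check (MJIT does it in generated C). The `Meters` class, the `SPECIALIZED_PLUS` constant, and the `plus_not_redefined?` and `fast_add` helpers are all hypothetical names invented for this example.

```ruby
# Toy stand-in for a core class whose methods can be redefined at runtime.
class Meters
  attr_reader :value

  def initialize(value)
    @value = value
  end

  def +(other)
    Meters.new(value + other.value)
  end
end

# The definition of Meters#+ that the fast path below was specialized for.
SPECIALIZED_PLUS = Meters.instance_method(:+)

# Hypothetical guard: true while Meters#+ is still the original definition.
def plus_not_redefined?
  Meters.instance_method(:+) == SPECIALIZED_PLUS
end

def fast_add(a, b)
  if plus_not_redefined?
    # Fast path: the body of Meters#+ inlined, skipping method dispatch.
    Meters.new(a.value + b.value)
  else
    # Deoptimized path: fall back to ordinary method dispatch.
    a + b
  end
end

before = fast_add(Meters.new(3), Meters.new(3)).value

# Redefine Meters#+ at runtime, which invalidates the fast path.
class Meters
  def +(other)
    Meters.new((value + other.value) * 100)
  end
end

after = fast_add(Meters.new(3), Meters.new(3)).value

puts before # prints 6
puts after  # prints 600
```

The guard runs on every call, which is the price paid for staying correct when a method is redefined. The bet is that the check is much cheaper than the work it lets you skip.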

Using the JIT

You can use the JIT by adding the --jit flag.

`$ ruby --jit -e "puts RubyVM::MJIT.enabled?"

true`

There are also a number of experimental JIT-related flag options:

MJIT options (experimental):

--jit-warnings Enable printing MJIT warnings

--jit-debug Enable MJIT debugging (very slow)

--jit-wait Wait until JIT compilation is finished everytime (for testing)

--jit-save-temps Save MJIT temporary files in $TMP or /tmp (for testing)

--jit-verbose=num Print MJIT logs of level num or less to stderr (default: 0)

--jit-max-cache=num Max number of methods to be JIT-ed in a cache (default: 1000)

--jit-min-calls=num Number of calls to trigger JIT (for testing, default: 5)

You can try the JIT interactively in IRB.

`$ ruby --jit -S irb`

irb(main):001:0> RubyVM::MJIT.enabled?

=> true

It seems to be a bit buggy in these early days, but the JIT also works with Pry.

$ ruby --jit -S pry

pry(main)> RubyVM::MJIT.enabled?

=> true
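If a script needs to know whether the JIT is active, a defensive check avoids a `NameError` on interpreters that do not define `RubyVM::MJIT` (for example, Ruby versions before 2.6 or other Ruby implementations). The helper name `mjit_enabled?` below is hypothetical; only `RubyVM::MJIT.enabled?` comes from the examples above.

```ruby
# Returns true only when this interpreter exposes RubyVM::MJIT and it is on.
def mjit_enabled?
  return false unless defined?(RubyVM::MJIT)
  return false unless RubyVM::MJIT.respond_to?(:enabled?)

  RubyVM::MJIT.enabled? ? true : false
end

if mjit_enabled?
  puts 'MJIT is enabled'
else
  puts 'MJIT is not enabled (on Ruby 2.6, try the --jit flag)'
end
```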

Startup Time

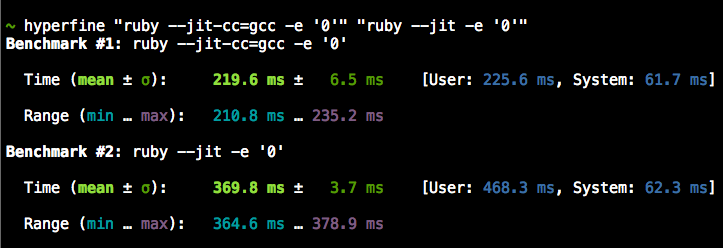

Startup time is one thing to weigh before using the new JIT. Starting up Ruby with the JIT takes about six times (6x) longer.

Whether you use GCC or Clang will also affect the startup time. At the moment, GCC is considerably faster than Clang, but it’s still more than three times (3x) slower than Ruby without the JIT.

Note: The --jit-cc flag may have to be removed for compatibility with the precompiled MJIT header.


As such, you probably won’t want to use the JIT with very short-lived programs. Not only does the JIT need to start up, but to be effective, it also needs time to warm up. Long-running programs are where the JIT will shine — where it can warm up and get a chance to use its cached native code.
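One rough way to see the startup cost on your own machine is to time an empty script with and without the flag. The sketch below is an assumption-laden measurement, not the method used for the figures above: `startup_seconds` is a hypothetical helper, a single run is noisy, and on Ruby builds where `--jit` is rejected or means something else the second number will be missing or not comparable.

```ruby
require 'rbconfig'

# Hypothetical helper: wall-clock seconds to start Ruby with the given flags
# and run an empty script. Returns nil if that Ruby exits unsuccessfully.
def startup_seconds(*flags)
  started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  ok = system(RbConfig.ruby, *flags, '-e', '', out: File::NULL, err: File::NULL)
  elapsed = Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
  ok ? elapsed : nil
end

baseline = startup_seconds
with_jit = startup_seconds('--jit')

puts format('without JIT: %.3fs', baseline) if baseline
puts format('with --jit:  %.3fs', with_jit) if with_jit
puts format('ratio: %.1fx', with_jit / baseline) if baseline && with_jit
```

Running each variant several times and comparing medians would give a steadier picture than one sample.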

Performance

In 2015, Matz announced the 3x3 initiative to make Ruby 3.0 three times (3x) faster than Ruby 2.0. The official benchmark for Ruby 3x3 is optcarrot, a working Nintendo emulator written in pure Ruby.

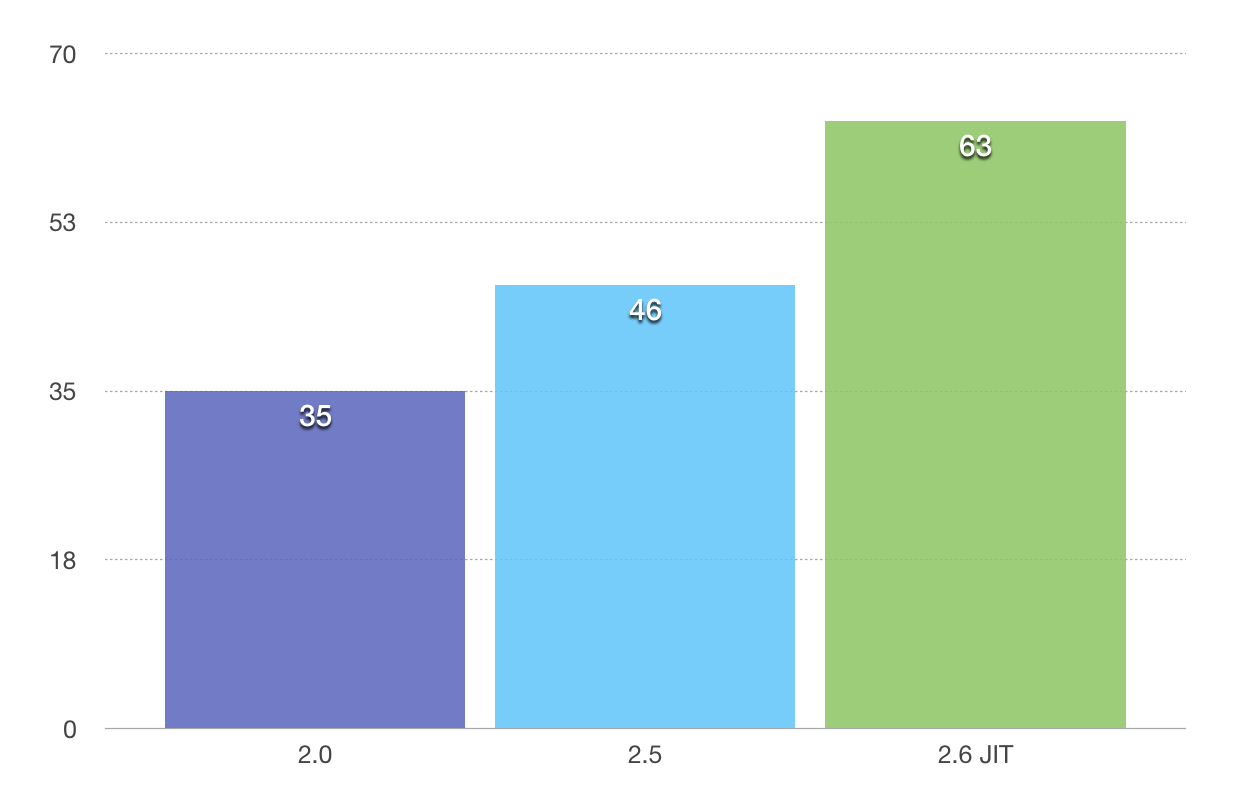

The actual Nintendo runs at 60 FPS. Kokubun’s benchmarks of optcarrot on a 4.0GHz i7-4790K with 8 cores show Ruby 2.0 at 35 FPS. Ruby 2.5 is a 30% improvement at 46 FPS. With the JIT enabled, Ruby 2.6 is 80% faster than Ruby 2.0 at 63 FPS. This is the carrot part of optcarrot (optimization carrot), a playable NES game at 60+ FPS.

optcarrot FPS comparison


That’s a big improvement! Adding a JIT to Ruby has already moved Ruby 2.6 well towards the 3x3 initiative goal. The initial JIT improvements are fairly modest, since MJIT was introduced without many of the optimizations found in the original RTL MJIT. Even without these optimizations, there is notable performance improvement. As additional optimizations are added, these improvements will become more profound.

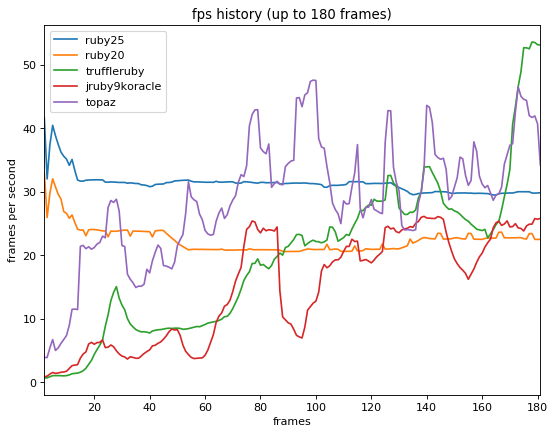

The benchmark below shows optcarrot performance for the first 180 video frames across a variety of Ruby implementations. Ruby 2.0 and 2.5 show a fairly flat performance. TruffleRuby, JRuby and Topaz are Ruby implementations that already have a JIT. You can see that the implementations with a JIT (the green, red and purple lines below) have a slow startup and then take a few frames to start warming up.

Image by Yusuke Endoh, distributed under MIT license.


After warming up, TruffleRuby pulls well ahead of the pack with its highly optimized GraalVM JIT. (See also TruffleRuby with SubstrateVM, which significantly improves startup time with only slightly less speed once warmed up.)

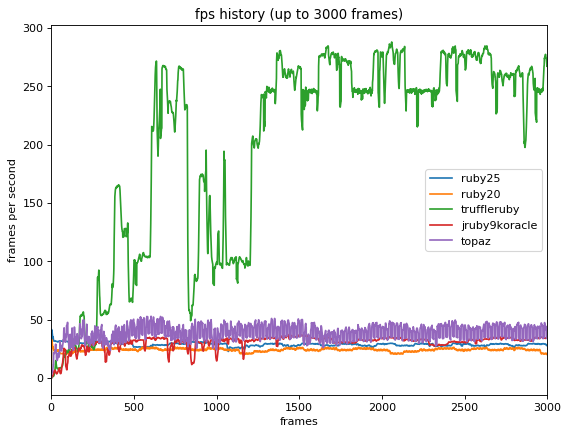

Image by Yusuke Endoh, distributed under MIT license.


The official optcarrot benchmarks don’t yet include Ruby 2.6-dev with the JIT enabled, but it won’t be able to compete with TruffleRuby at this point. TruffleRuby is well ahead of the rest of the pack, but not yet ready for production.

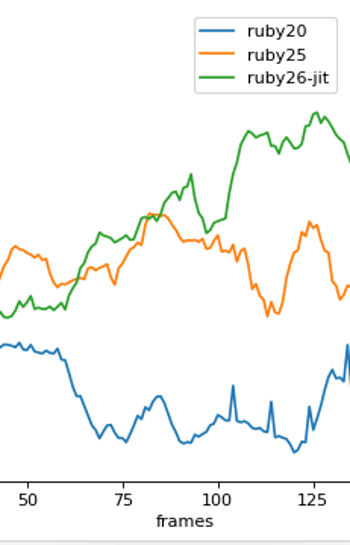

Modifying the optcarrot benchmarks to show Ruby 2.6-dev with the GCC-based JIT enabled, we can see that it takes a few frames to warm up. After warming up the JIT, even with most optimizations currently not enabled, it pulls ahead. Note the green line starting off slow but then ramping up and staying ahead thereafter.

If we zoom in, we can see that Ruby 2.6-dev with the GCC JIT hits the point of inflection where it pulls ahead of Ruby 2.5 after about 80 frames — just a couple seconds into the benchmark.

If your Ruby program is short-lived, and exits after only a few seconds, then you probably won’t want to enable the new JIT. If your program is longer-running, and you have a bit of memory to spare, the JIT may bring substantial performance benefits.
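To look for warm-up in your own code, you can time the same workload in repeated batches and watch how the per-batch time changes. The sketch below is a toy stand-in for the per-frame optcarrot measurements, not a reproduction of them. The `fib` workload, the batch count, and the input size are all arbitrary assumptions.

```ruby
# A deliberately call-heavy method so a JIT has something to compile.
def fib(n)
  n < 2 ? n : fib(n - 1) + fib(n - 2)
end

BATCHES = 10

# Time the same workload repeatedly. Under a JIT, later batches may run
# faster than the first few once hot methods have been compiled.
batch_seconds = Array.new(BATCHES) do
  started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  fib(22)
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - started
end

batch_seconds.each_with_index do |seconds, index|
  puts format('batch %2d: %.4fs', index + 1, seconds)
end
```

Running the script once with `ruby` and once with `ruby --jit` lets you compare the two curves. If your real workload finishes before the JIT-enabled run catches up, the JIT is unlikely to help.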

The Future

Here at Square we use Ruby broadly internally, and we maintain dozens of open source Ruby projects, including the Square Connect Ruby SDKs. So for us, CRuby’s new JIT is exciting. There are still lots of kinks to work out, and many low-hanging optimizations to take advantage of before its release this Christmas. Please try out the JIT now on Ruby trunk or the nightly snapshot, and report any issues you encounter.

Vladimir Makarov and Takashi Kokubun deserve a tremendous amount of credit for moving Ruby forward with the addition of a JIT that will continue to bring new speed improvements for years to come!
