Operating Vitess

Ripping Vitess apart and putting it back together.

It has been quite the challenge bringing Vitess online over our existing MySQL database, then sharding and operating it at greater scale over time. Individuals love using the Cash App, and while that is a great thing, being unprepared for peak load gives both new and existing users a poor experience. When we started sharding, an iconic line from The Wire’s Senator Clay Davis came to mind whenever I was updated on our incremental growth. We had already optimized the common code paths and removed all the inefficiencies that we could. We were running on expensive hardware and borrowed time. We needed a scalable solution, urgently.

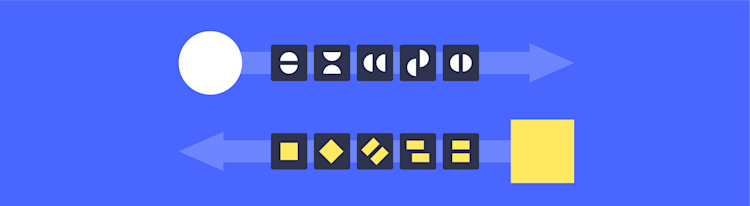

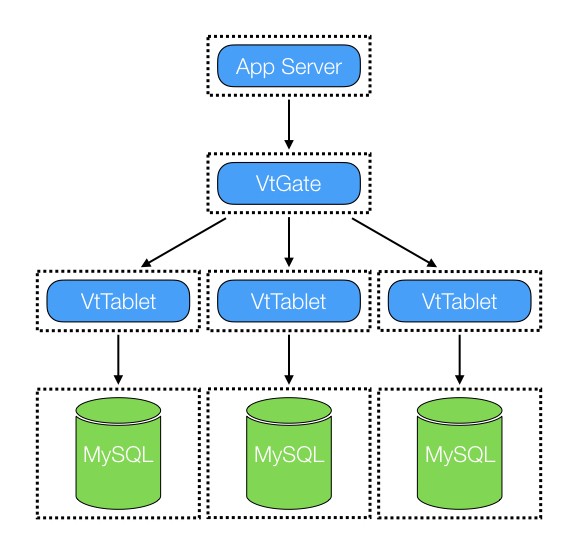

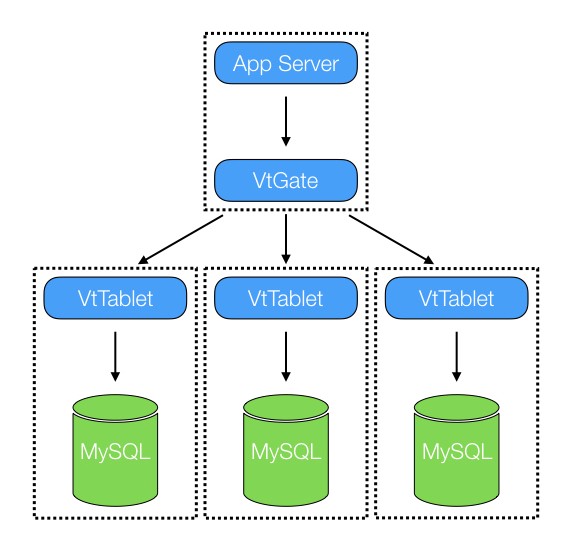

There are two Vitess components, the VtTablet and the VtGate, that are involved in pretty much every Vitess persistence request. The VtTablet is a proxy that runs in front of each MySQL, allowing Vitess to pool connections and protect the database in several ways, such as enforcing maximum query time and size. The VtTablet is meant to be 1:1 with the MySQL database it is managing and is normally hosted on the same host as that MySQL. It connects to that MySQL database using either a local socket or a TCP socket. The VtGate is the query router. It monitors the current state of the Vitess cluster, figuring out which queries should go to which databases, rewriting queries and aggregating data as required. The VtGate is the app’s entry point to Vitess. Normally your app connects to any of a fleet of VtGates using either the MySQL protocol or a Vitess driver.
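To make the "which queries go to which databases" step concrete, here is a minimal sketch of range-based routing. It is an illustration only, not how VtGate is implemented: the shard layout, the `keyspace_id` hash (SHA-256 is a stand-in chosen for this example), and the function names are all assumptions. Only the shard naming style (`-40`, `40-80`, ...) imitates Vitess's key-range convention.

```python
import hashlib

# Hypothetical layout: each shard owns a half-open range [start, end) of the
# first byte of a hashed sharding key. Names imitate key-range style names.
SHARDS = {
    "-40": (0x00, 0x40),
    "40-80": (0x40, 0x80),
    "80-c0": (0x80, 0xC0),
    "c0-": (0xC0, 0x100),
}


def keyspace_id(sharding_key: int) -> bytes:
    """Hash a sharding key. SHA-256 is a stand-in, not the hash Vitess uses."""
    return hashlib.sha256(str(sharding_key).encode()).digest()


def shard_for(sharding_key: int) -> str:
    """Return the name of the shard whose range contains the hashed key."""
    prefix = keyspace_id(sharding_key)[0]
    for name, (start, end) in SHARDS.items():
        if start <= prefix < end:
            return name
    raise LookupError(f"no shard covers prefix {prefix:#04x}")


def route(sharding_keys):
    """Group keys by shard, as a router might before fanning out a query."""
    plan = {}
    for key in sharding_keys:
        plan.setdefault(shard_for(key), []).append(key)
    return plan
```

Calling `route([1, 2, 3])` returns a dictionary from shard name to the keys that live there. A router would then send one sub-query per shard and merge the results.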

Back when we first started using Vitess, we were very concerned with what resources VtTablet might use. VtTablet could potentially starve the MySQL process of the resources it needed. Adding load that might take resources away from the database seemed very unwise when we were shard splitting precisely to reduce load. We needed to separate those components, so we put the VtTablet on a separate host. This worked very well, and got us through those first few splits, where we were balancing getting to smaller shards quickly against carefully exercising this new (to us anyway) shard splitting technology.

Everything on its own

There are a few downsides. The VtTablet host uses a lot of network capacity, since all traffic to MySQL now crosses an extra network hop. Also, we are forced to encrypt the data going between the VtTablet and the MySQL, which increases the overall CPU usage. Another drawback is managing the 1:1 nature of VtTablet to MySQL. We ended up using part of our Vitess lockserver to lock which VtTablet was running in front of which MySQL. Keeping track of what goes where was rather easy when we had 4 clusters. However, with every split that number doubles, so everything becomes increasingly difficult to track.
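The 1:1 bookkeeping described above can be sketched as a small claim registry. This is an in-memory stand-in written for illustration, not our lockserver code and not a Vitess API: `PairingRegistry`, `claim`, `release`, and `clusters_after_splits` are hypothetical names, and a real deployment would keep this state in a distributed lock service.

```python
import threading


class PairingRegistry:
    """In-memory stand-in for a lock service enforcing 1:1 tablet/MySQL pairs."""

    def __init__(self):
        self._lock = threading.Lock()
        self._tablet_by_mysql = {}
        self._mysql_by_tablet = {}

    def claim(self, tablet: str, mysql: str) -> bool:
        """Pair tablet with mysql; fail if either side is paired elsewhere."""
        with self._lock:
            if mysql in self._tablet_by_mysql or tablet in self._mysql_by_tablet:
                # Re-claiming an existing pair is allowed; anything else is not.
                return self._tablet_by_mysql.get(mysql) == tablet
            self._tablet_by_mysql[mysql] = tablet
            self._mysql_by_tablet[tablet] = mysql
            return True

    def release(self, tablet: str) -> None:
        """Drop the tablet's pairing, if it has one."""
        with self._lock:
            mysql = self._mysql_by_tablet.pop(tablet, None)
            if mysql is not None:
                del self._tablet_by_mysql[mysql]


def clusters_after_splits(initial: int, splits: int) -> int:
    """Each split doubles the number of clusters to keep track of."""
    return initial * 2 ** splits
```

Starting from 4 clusters, `clusters_after_splits(4, 3)` gives 32 pairings to track after three rounds of splitting, which is where hand-maintained mappings start to hurt.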

Generated using fontmeme.com

Fast forward a few months, and we’ve kept on splitting. Finally, our databases are down to a much more reasonable size and there are a lot more of them. The app, VtGate, VtTablet and MySQL processes are all on their own hosts. The Vitess processes are running at a fraction of host capacity so they can run beside other apps¹. We kept asking our hardware team for ever more hosts. The question that came up ever more frequently was how we could save on CPU and/or utilize the hardware more fully. We even got to a point where we were running more VtGate hosts than app hosts.

Now that we had seen how those Vitess processes ran under our load, we started to rethink the decision to keep all of these things separated. Profiling confirmed that the bulk of request time was spent shuffling data around, either dealing with TLS or marshalling/unmarshalling packets. Another problem was that our driver had some issues adapting when a VtGate was inaccessible, for example during VtGate deploys.

“Sidecar” by cireremarc is licensed under CC BY 2.0

Given those problems and hardware constraints, we began to bring those components back together. We started running those components as separate processes on the same machine in a sidecar configuration. We save on TLS by no longer spending CPU on encryption. By using local sockets or file descriptors to communicate, we save on the CPU spent handling packets. Our first concern was all those VtGates. It seemed ridiculous that there were more VtGates than actual app machines, so we moved the VtGate onto the app hosts. This was quickly accomplished by using a local, host-only port for traffic between the app and VtGate, so TLS was no longer necessary.
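The idea of a host-only port can be sketched with a listener bound to the loopback address. The echo server below is a toy stand-in for a sidecar process, not VtGate itself, and the function names are hypothetical. The point it illustrates is that a socket bound to `127.0.0.1` only accepts connections from the same host, so traffic over it never leaves the machine.

```python
import socket
import threading


def start_loopback_echo():
    """Start a one-shot echo server on 127.0.0.1 with an OS-chosen port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Binding to 127.0.0.1 (not 0.0.0.0) keeps the port unreachable from
    # other hosts; port 0 asks the OS for any free port.
    server.bind(("127.0.0.1", 0))
    server.listen(1)
    port = server.getsockname()[1]

    def serve_once():
        conn, _ = server.accept()
        with conn:
            conn.sendall(conn.recv(1024))  # echo a single small message
        server.close()

    thread = threading.Thread(target=serve_once, daemon=True)
    thread.start()
    return port, thread


def send_local(port: int, payload: bytes) -> bytes:
    """Connect to the local sidecar stand-in and return its reply."""
    with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
        client.sendall(payload)
        return client.recv(1024)
```

An app would use `send_local(port, b"select 1")` in place of a TLS connection to a remote host. A Unix domain socket would work along the same lines and avoids TCP entirely.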

It was time to revisit the isolated VtTablet. If we were to host the VtTablet on the same machine as the MySQL, we would need more MySQL hosts. However, we could return all the hosts that were currently running as separate VtTablets. This migration is in progress. As the list of VtTablets has grown longer, we have run into several instances where it has been a challenge to keep that 1:1 mapping of VtTablets to MySQLs straight. A great side effect of running the VtTablet and MySQL together is that it becomes obvious to the VtTablet which MySQL it is paired with. All that lockserver code goes to the trash, hurrah! So it is awesome to finally have that solution in the works.

Friends forever

In retrospect, the whole exercise seems to have been a bit of a circle. However, pulling Vitess apart helped us reduce our risk when first deploying and using Vitess. The push to sidecars has made Vitess easier to manage and reduced our hardware cost. It may offend my DRY (Don’t Repeat Yourself) principled brain, but by taking a step back before moving forward we have been able to continue to deliver a good experience to a greater number of customers over time. A great thing about software is that if you break something apart, it is often not that hard to piece it back together again later.

This post is part of Square’s Vitess series.

¹ This was because at the beginning it was easier to get portions of several hosts rather than a smaller set of whole hosts.