Instrumenting Firebase Test Lab

Automated testing with Firebase Test Lab at Square

At Square, we rely heavily on automated testing to ensure that our applications work well. Our Point of Sale Android codebase is just shy of 1.5 million lines of code, and we run about 6000 Android instrumentation tests on Firebase Test Lab on every pull request to prevent regressions. This gives our engineers the ability to make deep changes (e.g. migrating from MVP to Workflows) with a lot of confidence.

In this blog post, I share a few utilities we built on top of Firebase Test Lab. I hope you'll find them useful!

"Check device logcat for details"

Consider the following test:

```kotlin
@Test
fun displayViewOnClick() {
  onView(withId(R.id.button))
    .perform(click())
  onView(withId(R.id.displayed_view))
    .check(matches(isDisplayed()))
}
```

This test runs on the instrumentation thread, but most of the work happens on the main thread. All Espresso checks and actions are blocking calls. Each one schedules work on the main thread and blocks the instrumentation thread until that work has produced the expected results.

If a test assertion fails (e.g. a view is missing), the assertion throws an exception on the instrumentation thread. The Android JUnit runner catches that exception and reports it back as a failure (see InstrumentationResultPrinter#testFailure).

However, if an exception is thrown and not caught on any other thread, the default uncaught exception handler kills the process (learn more here). The test result then shows the dreaded:

```
Test failed to run to completion. Reason: 'Instrumentation run failed due to 'java.lang.RuntimeException''. Check device logcat for details
```

This message doesn't say which test was running or where the exception came from. Scrolling through logcat to find out is not a pleasant experience when CI runs thousands of tests.
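The root cause is that exceptions don't cross thread boundaries on the JVM. An exception is either caught on the thread that threw it, or it is handed to that thread's uncaught exception handler. The plain-Kotlin sketch below has no Android or Espresso dependency. The names runOnOtherThread and runOnCallingThread are hypothetical, and a freshly created Thread stands in for the main thread. It shows that the caller's join() returns normally, and the exception only reaches the handler.

```kotlin
/**
 * Runs [work] on a new thread (standing in for the main thread) and returns the exception
 * that thread failed to handle, if any. The caller never sees it as a thrown exception:
 * the JVM routes it to the thread's uncaught exception handler instead.
 */
fun runOnOtherThread(work: () -> Unit): Throwable? {
    var uncaught: Throwable? = null
    val worker = Thread { work() }
    // A per-thread handler, so this demo leaves the process-wide default handler untouched.
    worker.setUncaughtExceptionHandler { _, throwable -> uncaught = throwable }
    worker.start()
    worker.join() // Returns normally: the exception did not propagate to this thread.
    return uncaught
}

/** Throwing on the calling thread, by contrast, produces an ordinary catchable exception. */
fun runOnCallingThread(work: () -> Unit): Throwable? =
    try {
        work()
        null
    } catch (e: RuntimeException) {
        e
    }
```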

I was inspired by what we did in LeakCanary to detect leaks in instrumentation tests and created CrashingRunListener (full source here): a JUnit RunListener that detects uncaught exceptions in instrumentation tests and reports a failure status to Instrumentation before letting the default crash handling continue (which terminates the process).

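```kotlin
// Capture the existing handler first, so we can delegate to it after reporting.
val defaultHandler = Thread.getDefaultUncaughtExceptionHandler()

Thread.setDefaultUncaughtExceptionHandler { thread, throwable ->
  // reportTestFailure() isn't shown in this excerpt; it reports a failure status to Instrumentation.
  reportTestFailure(
    "Instrumentation test failed due to uncaught exception in thread [${thread.name}]:\n" +
      Log.getStackTraceString(throwable)
  )
  // Let the default crash handling continue, which terminates the process.
  defaultHandler.uncaughtException(thread, throwable)
}
```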
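The same chaining pattern can be sketched on a plain JVM. This version takes a report callback in place of the Instrumentation reporting that the snippet above performs, and installReportingHandler is a hypothetical name. The important detail is to read the previous default handler before installing the new one. Otherwise there is nothing left to delegate to, and the original crash handling never runs.

```kotlin
/**
 * Installs a process-wide uncaught exception handler that reports the crash through [report]
 * and then delegates to whichever default handler was installed before.
 * Returns that previous handler so callers can restore it later.
 */
fun installReportingHandler(report: (String) -> Unit): Thread.UncaughtExceptionHandler? {
    // Read the previous handler *before* replacing it.
    val previous = Thread.getDefaultUncaughtExceptionHandler()
    Thread.setDefaultUncaughtExceptionHandler { thread, throwable ->
        report("Uncaught exception in thread [${thread.name}]: $throwable")
        // Let the original crash handling continue. A plain JVM may have no default
        // handler installed (previous == null); in that case this sketch only reports.
        previous?.uncaughtException(thread, throwable)
    }
    return previous
}
```

Returning the previous handler also lets a test put the process back the way it found it.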

You can add that run listener to defaultConfig in build.gradle, then run ./gradlew app:connectedCheck as you normally would:

```groovy
android {
  defaultConfig {
    // ...
    testInstrumentationRunner "android.support.test.runner.AndroidJUnitRunner"
    testInstrumentationRunnerArgument "listener", "com.example.CrashingRunListener"
  }
}
```
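If your module uses the Gradle Kotlin DSL (build.gradle.kts) instead, an equivalent configuration might look like the following. This sketch assumes the AndroidX runner (androidx.test.runner.AndroidJUnitRunner) rather than the support-library runner named above. AndroidJUnitRunner's listener argument accepts a comma-separated list of classes, so further listeners can be appended to the same value.

```kotlin
// build.gradle.kts (module level), sketch assuming AndroidX Test.
android {
    defaultConfig {
        // ...
        testInstrumentationRunner = "androidx.test.runner.AndroidJUnitRunner"
        // Same key/value pair as testInstrumentationRunnerArgument in the Groovy DSL.
        testInstrumentationRunnerArguments["listener"] = "com.example.CrashingRunListener"
    }
}
```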

When using Firebase Test Lab, you can instead pass runner arguments with the --environment-variables flag:

```shell
gcloud firebase test android run \
  …
  --environment-variables listener=com.example.CrashingRunListener
```

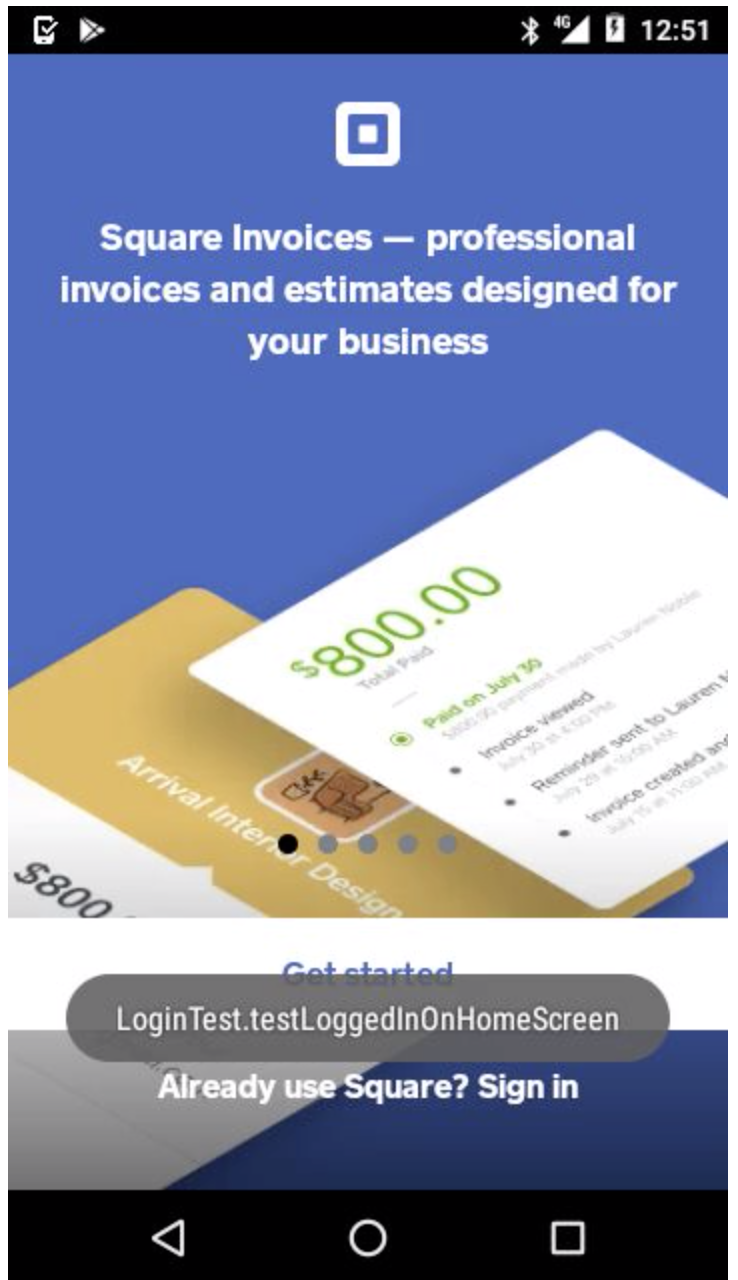

Toasted video

Firebase Test Lab records a video of the test run, which can help us understand why a test failed. Unfortunately, we get a single video covering every test in the run, so it can be hard to find the part that corresponds to a given test. A quick fix was to show a toast with the name of the test whenever a test starts:

```kotlin
package com.example

import android.widget.Toast
// Square-internal test helpers, not a public library. Rough AndroidX equivalents are
// InstrumentationRegistry.getInstrumentation().runOnMainSync { ... } and a Context
// obtained from that same Instrumentation.
import com.squareup.instrumentation.framework.Instrumentations.getTestAppContext
import com.squareup.instrumentation.framework.Instrumentations.runOnMainSync
import org.junit.runner.Description
import org.junit.runner.notification.RunListener

/**
 * Toast the name of each test to the screen to make the test easier to identify in a
 * Firebase video stream.
 */
internal class ToastingRunListener : RunListener() {
  override fun testStarted(description: Description) {
    val testName = description.displayName
    // Toasts need a thread with a Looper, so show the toast from the main thread.
    runOnMainSync {
      Toast.makeText(getTestAppContext(), testName, Toast.LENGTH_LONG)
        .show()
    }
  }
}
```

Registering several listeners requires a comma-separated list. However, gcloud also uses commas to separate environment variables. To avoid the clash, we change the gcloud delimiter to a colon by prefixing the value with ^:^ (see gcloud topic escaping):

```shell
gcloud firebase test android run \
  …
  --environment-variables "^:^listener=com.example.CrashingRunListener,com.example.ToastingRunListener"
```

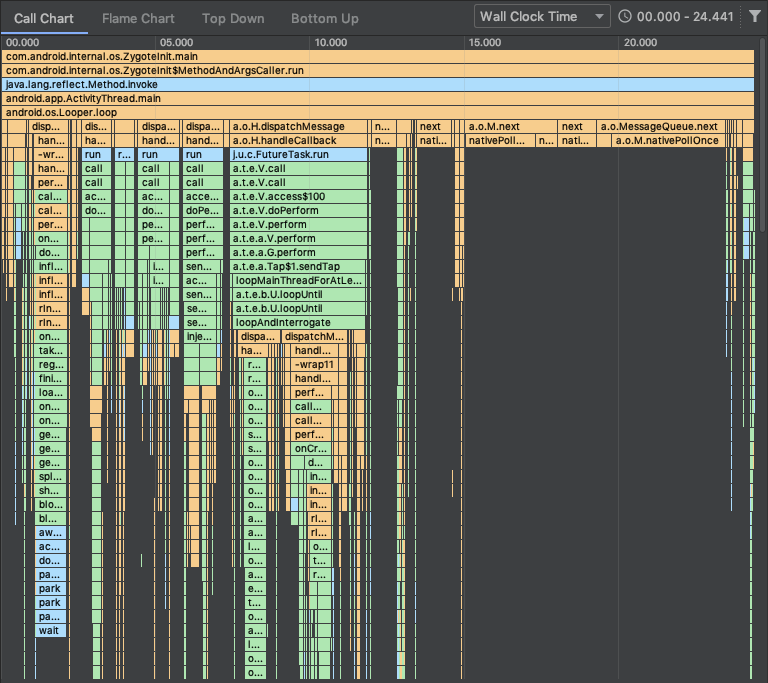
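To see why the ^:^ prefix is needed, here is a simplified model of how gcloud splits a dict-style flag value into KEY=VALUE pairs. It's an illustration only, not gcloud's real parser, and parseDictFlag is a hypothetical name. Without the prefix, the second listener class is split off as its own entry with no "=". As a result, the listener value can't be read as intended.

```kotlin
/**
 * Simplified model of a gcloud dict flag such as --environment-variables.
 * Pairs are separated by ',' unless the value starts with ^X^, which makes X the separator.
 */
fun parseDictFlag(raw: String): Map<String, String> {
    var delimiter = ","
    var body = raw
    // A leading ^X^ replaces the default ',' delimiter with X.
    if (raw.startsWith("^")) {
        val end = raw.indexOf('^', startIndex = 1)
        require(end > 1) { "Malformed delimiter prefix in: $raw" }
        delimiter = raw.substring(1, end)
        body = raw.substring(end + 1)
    }
    return body.split(delimiter).associate { pair ->
        require('=' in pair) { "Not a KEY=VALUE pair: '$pair'" }
        pair.substringBefore('=') to pair.substringAfter('=')
    }
}
```

With the colon as separator, the commas stay inside the listener value, and further variables can follow after a colon.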

Tracing UI tests

Sometimes the video isn't enough, and we need to understand exactly what the code is doing, across several threads. For that, we created a @TraceTest annotation and a TracingRunListener (full source here) that will capture a detailed trace which we can then open in Android Studio:

```kotlin
@TraceTest
@Test
fun displayViewOnClick() {
  onView(withId(R.id.button))
    .perform(click())
  onView(withId(R.id.displayed_view))
    .check(matches(isDisplayed()))
}
```

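In TracingRunListener, we only start sampling for tests that carry the annotation:

```kotlin
override fun testStarted(description: Description) {
  if (description.getAnnotation(TraceTest::class.java) != null) {
    // traceFile, BUFFER_SIZE and INTERVAL_MICROS are not shown in this excerpt.
    Debug.startMethodTracingSampling(description.traceFile, BUFFER_SIZE, INTERVAL_MICROS)
  }
}

// Sketch: tracing must be stopped to finalize the trace file; the full source may differ.
override fun testFinished(description: Description) {
  if (description.getAnnotation(TraceTest::class.java) != null) {
    Debug.stopMethodTracing()
  }
}
```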
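The excerpts above don't show how @TraceTest is declared. Here is a minimal sketch, assuming @TraceTest is a plain marker annotation. It needs runtime retention so that JUnit's Description, which carries the test method's annotations, can see it while tests run. Runtime is already Kotlin's default retention; it is spelled out here for clarity. SampleTests and shouldTrace are hypothetical, and plain reflection stands in for Description.getAnnotation.

```kotlin
/** Hypothetical marker for tests that should be traced. */
@Retention(AnnotationRetention.RUNTIME)
@Target(AnnotationTarget.FUNCTION)
annotation class TraceTest

class SampleTests {
    @TraceTest
    fun tracedTest() {}

    fun untracedTest() {}
}

/** Returns true if the public method [methodName] on [testClass] is annotated with @TraceTest. */
fun shouldTrace(testClass: Class<*>, methodName: String): Boolean =
    testClass.getMethod(methodName).getAnnotation(TraceTest::class.java) != null
```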

We then register TracingRunListener alongside the other listeners:

```shell
gcloud firebase test android run \
  …
  --environment-variables "^:^listener=com.example.CrashingRunListener,com.example.ToastingRunListener,com.example.TracingRunListener"
```

Looping flaky tests

We regularly investigate flaky tests to increase trust in our test suite, and to investigate a failure we need to reproduce it. Unfortunately, by definition, a flaky test doesn't fail every time. One approach that has worked for us is to repeat the test until it fails, using a simple test rule that we apply to all tests:

```kotlin
import androidx.test.InstrumentationRegistry
import org.junit.rules.TestRule
import org.junit.runner.Description
import org.junit.runners.model.Statement
import timber.log.Timber

/**
 * A [TestRule] that will repeat each test repeatTestCount times if the test run was started with a
 * `repeatTestCount` test runner argument.
 */
class TestRepeatRule : TestRule {
  override fun apply(
    base: Statement,
    description: Description
  ): Statement {
    val args = InstrumentationRegistry.getArguments()
    val repeatTestCount = args.getString("repeatTestCount")?.toInt() ?: 0
    return if (repeatTestCount > 1) {
      object : Statement() {
        override fun evaluate() {
          for (i in 1..repeatTestCount) {
            Timber.d("Repeating test, iteration %d of %d", i, repeatTestCount)
            // A failure throws out of the loop, so the first failing iteration fails the test.
            base.evaluate()
          }
        }
      }
    } else {
      base
    }
  }
}
```
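To see why this turns "run it N times" into "run until it fails", here is the same control flow as a plain-Kotlin sketch with no JUnit dependency. The names runRepeated and repeatTestCount are hypothetical, the argument Bundle is modeled as a Map, and body stands in for base.evaluate(). Nothing catches the exception thrown by a failing iteration, so the loop stops right there and that failure becomes the test result.

```kotlin
/** Reads the optional repeatTestCount argument, defaulting to 0 when it's absent. */
fun repeatTestCount(args: Map<String, String>): Int =
    args["repeatTestCount"]?.toInt() ?: 0

/** Runs [body] repeatTestCount times when that count is above 1, otherwise exactly once. */
fun runRepeated(args: Map<String, String>, body: (iteration: Int) -> Unit) {
    val count = repeatTestCount(args)
    if (count > 1) {
        // An exception from body() escapes the loop, ending the run at the first failure.
        for (i in 1..count) body(i)
    } else {
        body(1) // Equivalent to returning `base` unchanged.
    }
}
```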

We can then pass the repeatTestCount argument as an environment variable:

```shell
gcloud firebase test android run \
  …
  --environment-variables "^:^listener=com.example.CrashingRunListener,com.example.ToastingRunListener,com.example.TracingRunListener:repeatTestCount=20"
```

That's it for now! I'd love to see what other engineers are building to instrument UI tests!