Incident Summary: 2017–03–16

Authors: Erik Bourget, Toby Reyelts, and Xavier Shay

On March 16, beginning at 10:02 a.m. PDT, Square’s serving infrastructure experienced a service disruption. Most of Square’s products and services were affected in this outage, including payment processing, Point of Sale, Dashboard, Appointments, and Payroll. At 11:55 a.m. PDT, service was restored for all services except SMS delivery of two-factor authentication codes. SMS recovered at 1:12 p.m. PDT.

This postmortem document aims to communicate the root cause of this outage, document the steps that we took to diagnose and resolve the outage, and share actions that we are taking to ensure that we are properly defending our customers from service interruptions like this in the future.

This is our engineering-focused timeline. Please see https://www.issquareup.com for a high-level overview.

Incident Summary: 2017–03–16 ALERT-2791 Widespread Service Outage

Root Cause: Merchant authentication service unrecoverably overloaded due to bug in adjacent service

Impact: Service outage for all merchants from 10:02 AM to 11:55 AM; 2FA outage until 1:12 PM

Timeline (Pacific)

What happened?

9:55 We began rolling out changes to “Roster,” the service that handles customer identity, in a single datacenter.

Roster, like our other critical systems, is active-active in multiple datacenters. It is normal procedure for us to deploy continuously throughout the day; across our various services, we deploy to production over 250 times per day.

10:02 “Multipass”, our customer authentication service, began reporting timeouts to oncall engineers. This backend service is critical to most of Square’s core product services.

10:02 Beginning of impact

10:06 Roster paged an oncall engineer.

10:08 Our external monitoring notified us that api.squareup.com was down.

10:08 Severe impact began

10:10 Engineers from across Square began reporting that services depending on merchant identity were experiencing failures. It was clear to us at this point that this was a major outage. We executed a few standard operating procedures:

- We conferenced engineers across offices.
- We rolled back all software changes that happened leading up to the incident. This is a non-negotiable response to any customer-impacting event; our engineers are trained to undo any change that happened before an incident regardless of how plausible it is that the change caused the issue.
- We activated our “Crisis team” (a cross-functional group of leaders) to ensure that our complete disaster response plan was followed.
- We updated issquareup.com to notify sellers of the disruption, and continued to update the site every 15 minutes until we reached full resolution.

10:23 Roster completed rollback. We believed that this would have a high probability of resolving the outage, as Multipass has a dependency on Roster and the timing of the Roster deployment lined up with the beginning of issues in Multipass. Unfortunately, this did not resolve the outage. Looking at the data in retrospect, Roster continued to be available for the duration, serving out of other datacenters, as designed.

10:25 We shut Multipass off and turned it back on. Our theory was that Roster put Multipass into a bad state and that restarting it would restore service. This theory was proven false.

[Support] 11:10 We noticed our status page was loading slowly, and immediately contacted our status page vendor. They updated their own status page to explain that their databases were under heavy load and that they were actively working on a resolution.

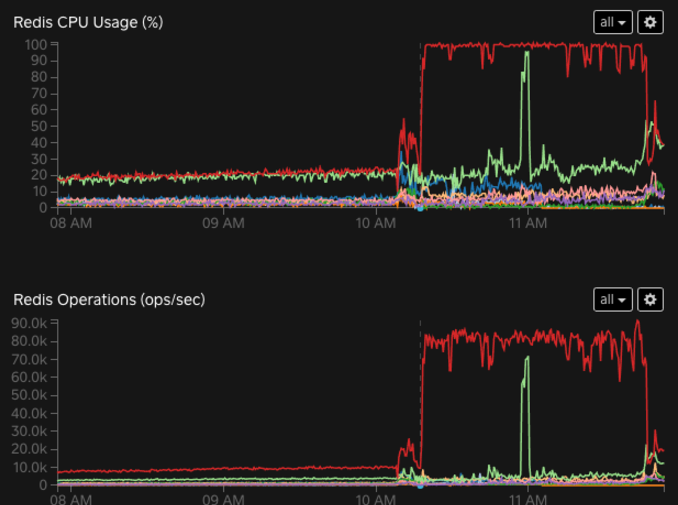

[Engineering] 10:30–11:30 We split into a few workstreams of diagnosis, testing theories, and tweaking tunables in our systems. Our engineers discovered that Multipass’s Redis database had suddenly and unexpectedly reached its capacity limit.

We used both Redis SLOWLOG and MONITOR to determine which operations were hitting Redis hardest, then traced them back to a specific code path in Multipass that retries a Redis optimistic transaction. We determined that the retries had a high upper bound (500) and no backoff, causing a harmful feedback loop, and started working on a fix.
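To illustrate the class of fix described above, here is a minimal sketch of a retry loop with a small attempt cap and exponential backoff with jitter. It is not the code that was deployed to Multipass; `TransactionConflict`, `retry_with_backoff`, and all default values are hypothetical names and numbers chosen for the example.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class TransactionConflict(Exception):
    """Hypothetical error: an optimistic transaction lost a race and must be retried."""


def retry_with_backoff(
    attempt: Callable[[], T],
    max_attempts: int = 5,       # assumed cap, far below the 500 in the incident
    base_delay: float = 0.01,    # assumed first backoff ceiling, in seconds
    max_delay: float = 1.0,      # assumed upper limit on any single wait
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call `attempt` until it succeeds or `max_attempts` conflicts have occurred."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng or random.Random()
    for attempt_number in range(1, max_attempts + 1):
        try:
            return attempt()
        except TransactionConflict:
            if attempt_number == max_attempts:
                raise  # give up and surface the conflict to the caller
            # The ceiling doubles with each conflict, capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt_number - 1))
            # Random jitter keeps competing clients from retrying in lockstep.
            sleep(rng.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

With no backoff, every conflicting client retries immediately and adds load at the moment the datastore is least able to absorb it. Spacing the retries out and capping their number bounds the extra work a single request can generate.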

Though this was promising, our engineering processes dictate that we do not stop exploring solutions until we have a confirmed fix. Square hosts multiple services on each of its servers and uses Linux cgroups to isolate them from each other, which limits the amount of CPU and memory that a given service can use on a machine. We identified key services and lifted those limits so that they had full access to the machines they were running on. This gave services critical to our payment processing flow the maximum possible priority on our compute resources.
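As a rough illustration of what lifting such limits can involve, the sketch below lists the control-file writes that remove CPU and memory caps for one group. It assumes cgroup v1 with the `cpu` and `memory` controllers mounted under `/sys/fs/cgroup`; the group name `payments-critical` and the helper `unlimited_settings` are hypothetical, and this is not a description of Square's actual tooling.

```python
from pathlib import PurePosixPath
from typing import Dict

# Conventional cgroup v1 mount point; the real layout on any host is an assumption.
CGROUP_ROOT = PurePosixPath("/sys/fs/cgroup")


def unlimited_settings(group: str) -> Dict[str, str]:
    """Return the cgroup v1 control-file writes that lift CPU and memory caps."""
    cpu_dir = CGROUP_ROOT / "cpu" / group
    memory_dir = CGROUP_ROOT / "memory" / group
    return {
        # Writing -1 disables the CFS bandwidth quota for the group.
        str(cpu_dir / "cpu.cfs_quota_us"): "-1",
        # Writing -1 removes the memory usage limit for the group.
        str(memory_dir / "memory.limit_in_bytes"): "-1",
    }


if __name__ == "__main__":
    # Dry run only: print the writes instead of touching the filesystem.
    for path, value in unlimited_settings("payments-critical").items():
        print(f"would write {value!r} to {path}")
```

The example only prints the intended writes, so it can be run safely on any machine.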

By 11:45, our fix was written, reviewed, built, and tested.

11:48 West coast datacenter Multipass deploy providing the ability to reduce retries on Redis transactions.

11:48 multipass-redis immediately recovered as the improvement rolled out. Payments began to flow normally!

[Support] 11:56 Our status page vendor informed us that a fix for their load issues had been released. However, our status page was functioning normally for a large percentage of visitors during the period in which the vendor was experiencing load problems.

11:59 East coast datacenter Multipass deploy.

12:00 Japan datacenter Multipass deploy.

12:00 Logins were back up.

At this point, we began exercising all of our systems to validate that service had truly been restored. We discovered that one issue remained: while testing our sign-out/sign-in flow, we realized that two-factor authentication codes were not being sent to customers who had enabled 2FA. This was the result of a surge of SMS messages caused by the sudden rush of logins to our system. We contacted our SMS vendor and worked to remove the bottleneck in sending SMS. With them, we determined that rebalancing our outbound SMS phone numbers would increase capacity.

12:32 We began adding new SMS numbers to the pool.

12:42 We cleared old numbers from the pool.

12:46 During testing, an engineer received a new 2FA code in ~10s. Recovery was underway.

13:12 SMS 2FA codes began working for everyone.

We gathered information until 4:30PM, at which point we held a post-mortem meeting and discussed what went well and poorly in our response. Overall, our processes for triaging the issue worked as intended, but the thorny nature of this problem meant that they did not drive the quick resolution that we are used to. We feel that improvement in in-app messaging would have benefited our customers. We experienced some small delays in triage due to the current setup in our workroom. We took several action items, listed below, that we believe will address these issues. Bolded items directly remediate the technical root cause of the outage. Items not in bold are opportunities that we identified to improve the resilience of our systems and efficacy of our processes.

Action Items

Merchant Identity team:

- Determine if the single datacenter Roster outage exacerbated Multipass-Redis retries and if there are additional dependencies we need to break between Multipass and Roster.
- Identify other areas where retry logic is used and audit them to ensure that they aren’t vulnerable to the same flaw.
- Audit cgroup constraints to ensure that the limits we place on our applications are correct and reasonable.

Payments team:

- Meet with adjacent teams to re-affirm SLAs between services.
- Meet with iOS/Android Point of Sale teams to discuss more effective in-app messaging of outages.

Infrastructure team:

- Write a Redis runbook based on the outage to guide Redis troubleshooting. We learned some lessons about apps interacting with Redis during the outage, and recording them will increase knowledge across engineering.
- Set up a second postmortem for the SMS issues. We feel that there is more to explore and learn here.
- Stock the Operations Center conference room with readily available testing equipment so that customer experience can be more easily evaluated from the Operations Center during an outage. Our standard operating procedure of engineers using their own devices to test during an outage was stretched during this one. Dedicated test stations will make debugging and verifying progress simpler.

Support team:

- Follow up with status page vendor to improve issquareup.com reliability.