Human Botnet Part 1

Scaling application security with metrics and automation.

Written by Tom Daniels.

This is the first in a series of posts from the Square Information Security Team. Over the course of these posts, we’ll detail some of the initiatives we’ve undertaken in order to scale security as the organization grows while still managing our risk effectively.

Over the last few years, Square has grown dramatically, both in the number of services we offer and in the size of the engineering organization. As these numbers grew, it became obvious that manually testing and monitoring every release of every service would be impossible. Scaling security to support our growing engineering team has therefore been a primary goal of the Application Security Team since February. In that time, we’ve focused mainly on two things:

- Collecting metrics to help us identify where we should be focusing our resources.
- Automating vulnerability detection where appropriate to allow us to prioritize our focus.

Reporting

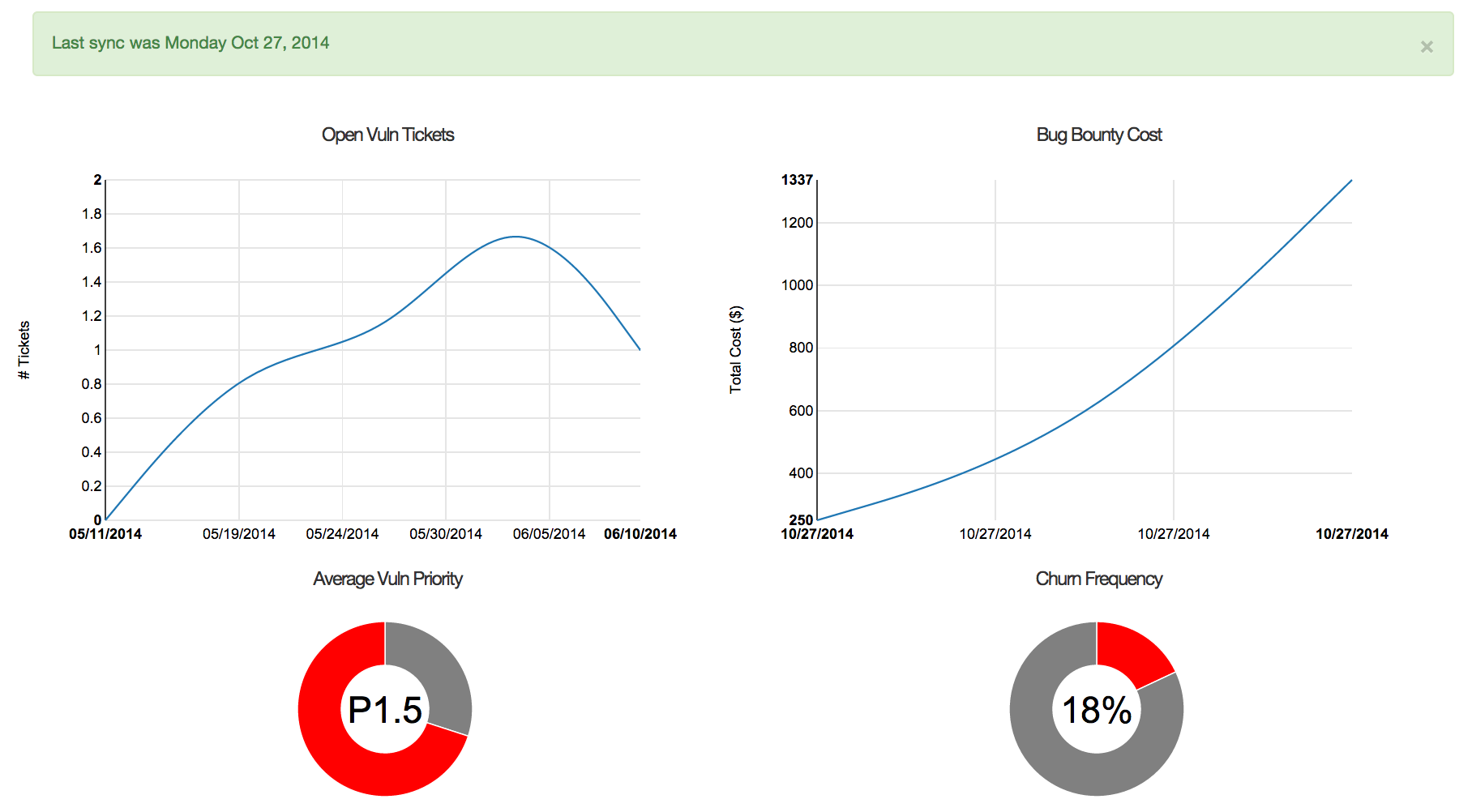

We can’t effectively manage risk that we don’t know about. Therefore our first goal was to aggregate relevant metrics so we can integrate and contextualize disparate data sets. We collect both hard and soft metrics and pool them by project, allowing us to assess the risk of a project as the sum of its parts. A few examples include:

- vulnerability statistics from JIRA
- bug bounty data from HackerOne
- code churn and code quality metrics from git and CodeClimate
- data on how closely we have been working with a specific team

Having all of these metrics in one central location gives us insight into how much risk a particular project presents and puts us in a position to detect problems early on. For example, if a project shows a recent increase in code churn along with pull requests that were merged without approval (potentially across multiple repositories), the combined increase in these factors automatically triggers an alert. This allows us to prioritize where we spend our time and to catch vulnerabilities in our software before it gets deployed to production.
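To make the idea of a combined alert concrete, here is a minimal sketch. The field names, weights, and threshold are illustrative assumptions, not the values or schema we actually use; the only point is that a project is scored as the sum of its parts and an alert fires on the combined change rather than on any single metric.

```python
from dataclasses import dataclass


@dataclass
class ProjectMetrics:
    """Hypothetical per-project snapshot; field names are illustrative."""
    open_vulnerabilities: int   # e.g. from the issue tracker
    bounty_reports: int         # e.g. from the bug bounty program
    code_churn: int             # lines changed during the period
    unapproved_merges: int      # pull requests merged without approval


# Assumed weights; a real system would tune these against its own history.
WEIGHTS = {
    "open_vulnerabilities": 5.0,
    "bounty_reports": 3.0,
    "code_churn": 0.01,
    "unapproved_merges": 4.0,
}


def risk_score(metrics: ProjectMetrics) -> float:
    """Score a project as the weighted sum of its individual metrics."""
    return sum(weight * getattr(metrics, name) for name, weight in WEIGHTS.items())


def should_alert(previous: ProjectMetrics, current: ProjectMetrics,
                 threshold: float = 20.0) -> bool:
    """Alert when the combined score rose by at least `threshold` between snapshots."""
    return risk_score(current) - risk_score(previous) >= threshold
```

With these assumed weights, a jump in churn alone may stay under the threshold, while the same jump plus a few unapproved merges crosses it.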

However, in order to minimize our day-to-day involvement, it is important to evangelize ownership of vulnerabilities to the team leads that oversee projects. Our metrics aggregation assists with this by tracking vulnerability remediation SLAs. The SLA is based on the priority of a given issue, which is driven by the vulnerability class and the security zone where it is located. The engineering teams can then be evaluated against their remediation timelines across all of their projects. Similarly, the security team uses the aggregated metrics to identify systemic issues across the organization. By classifying issues using a single bug taxonomy, we can see trends across projects and teams that we then use to drive our engineering efforts. This allows us to stop spot-fixing vulnerabilities and instead address each class of problem once for the entire engineering team.
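As a rough sketch of how a priority and its SLA could be derived from those two inputs: the vulnerability classes, zone names, scoring rule, and day counts below are all hypothetical placeholders chosen for illustration, not our real taxonomy or timelines.

```python
from datetime import date, timedelta

# Hypothetical severity per vulnerability class and exposure per security zone.
CLASS_SEVERITY = {"sql_injection": 3, "xss": 2, "verbose_error": 1}
ZONE_EXPOSURE = {"payments": 3, "internal": 2, "sandbox": 1}

# Assumed remediation windows in days, keyed by priority.
SLA_DAYS = {"P0": 7, "P1": 30, "P2": 90}


def priority(vuln_class: str, zone: str) -> str:
    """Combine class severity and zone exposure into a priority bucket."""
    score = CLASS_SEVERITY[vuln_class] * ZONE_EXPOSURE[zone]
    if score >= 6:
        return "P0"
    if score >= 3:
        return "P1"
    return "P2"


def due_date(opened: date, vuln_class: str, zone: str) -> date:
    """Remediation deadline implied by the issue's priority."""
    return opened + timedelta(days=SLA_DAYS[priority(vuln_class, zone)])


def is_out_of_sla(opened: date, vuln_class: str, zone: str, today: date) -> bool:
    """True once the deadline has passed, i.e. the issue should be escalated."""
    return today > due_date(opened, vuln_class, zone)
```

Because the deadline is computed from the taxonomy rather than set by hand, the same rule applies to every team, which is what makes cross-project comparison of remediation timelines meaningful.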

Automation

Once we prioritized our efforts, we freed ourselves up to build automation. With the number of applications we support growing every day, we needed a way to ensure that all projects, including those that don’t get a lot of attention from our team, still undergo some baseline of security testing prior to release. So, we built a dynamic analysis framework that allows us to manage all of our automated security scans from a central location. Using the framework, we can easily plug in off-the-shelf scanners, run customized fuzzers, and quickly write one-off detection scripts.

Running all of our scans in one place using this framework lets us easily coordinate scheduling and gives us the added benefit of tying the vulnerability reporting mechanism back into our metrics feedback loop. We can automatically file tickets for vulnerabilities found by checks with high signal-to-noise ratios and disregard known false positives. This minimizes the number of potential issues that require triage by our team before we escalate them to the project’s owner. Again, this encourages the engineering team to be responsible for the security of their applications. Automated scanning is obviously not sufficient to find certain classes of issues (e.g., business logic vulnerabilities), but it does increase our overall coverage and effectively guards against regressions. This combination lets us focus design review and manual testing efforts so that we don’t have to spend lots of time on low-hanging fruit.
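The routing described above can be sketched as follows. The check names, the tuple shape for a finding, and the three buckets are assumptions made for this example; actual ticket filing is left out.

```python
from typing import Dict, Iterable, List, Set, Tuple

# A finding is (check name, target, title); this shape is a hypothetical choice.
Finding = Tuple[str, str, str]

# Assumed set of checks trusted enough to file tickets without human review.
HIGH_SIGNAL_CHECKS: Set[str] = {"debug-endpoint-check"}


def triage(findings: Iterable[Finding],
           known_false_positives: Set[Finding]) -> Dict[str, List[Finding]]:
    """Route each finding to auto-filing, manual triage, or the ignore list."""
    routed: Dict[str, List[Finding]] = {
        "auto_file": [],
        "manual_triage": [],
        "ignored": [],
    }
    for finding in findings:
        check = finding[0]
        if finding in known_false_positives:
            routed["ignored"].append(finding)
        elif check in HIGH_SIGNAL_CHECKS:
            routed["auto_file"].append(finding)
        else:
            routed["manual_triage"].append(finding)
    return routed
```

Checking the false-positive list first means a previously dismissed finding never reopens a ticket, even when it comes from a high-signal check.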

Takeaways

Scaling security with the engineering organization does not happen naturally, but distributing load to engineers and responsibility to team leads helps tremendously. Automating as much as we can so that we can prioritize from a reasonably sized list of concerns allows us to stop carrying stones and start building wheelbarrows. Additionally, automatically assigning issues to the appropriate teams and escalating issues out of SLA for remediation encourages ownership of product security. Finally, tracking metrics and reporting on how well we are performing helps us focus on priorities by quickly identifying where we should be spending our time.

Stay tuned for the next post in this series, out next month. We’ll cover how we’re scaling access controls with our single sign-on system, Doorman.