Enabling Serverless Applications at Square

Enabling AWS Lambda Functions to call into the Square datacenter’s Envoy service mesh

Square and the Cloud

Square is early in its migration to the cloud, and most applications run in our datacenters today. The Platform and Infrastructure Engineering (PIE) organization has historically focused on providing infrastructure and tooling to build and operate in the datacenter. These offerings have been limited due to the small size of PIE relative to the rest of Square. PIE sees the cloud as an opportunity to empower engineers at Square to choose the tools and architectural patterns that best fit their needs. Our strategy is twofold: first, enable teams to move existing applications to the cloud with minimal changes, and second, provide the tools and infrastructure for teams to architect their applications using cloud native patterns.

Our goal with cloud native development, initially focused on serverless applications, is to stay off the critical path for adoption. There are over 160 engineering teams at Square working on an extremely wide range of problems, from external-facing APIs to internal web applications, payments processing, voice systems, and more.

In the Datacenter

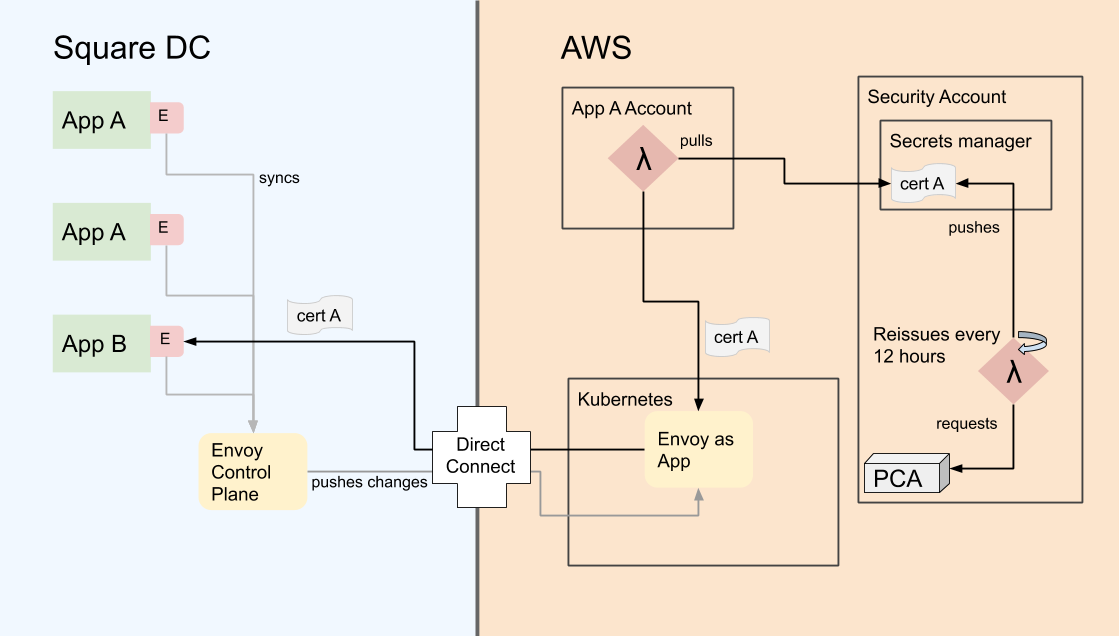

Applications in the Square datacenters communicate through Envoy, authenticated with mutual TLS (mTLS). Certificates are generated and rotated automatically on physical hosts. Service-to-service (s2s) communication is controlled by Envoy, which syncs application dependency information from an internal application called Registry. We decided early on to treat Lambda functions and datacenter deployments as logical components of the same application. Teams may further split these into separate apps if they choose to.

S2s calls in the datacenter rely heavily on automation and configuration. To enable lambdas to call into datacenter applications, we needed to figure out what tooling could be reused and what needed to be built. As it turned out, a lot needed to be built. We chose an automation-first approach, since we knew it would scale better than creating manual processes first and later having to deprecate them.

Getting started in AWS

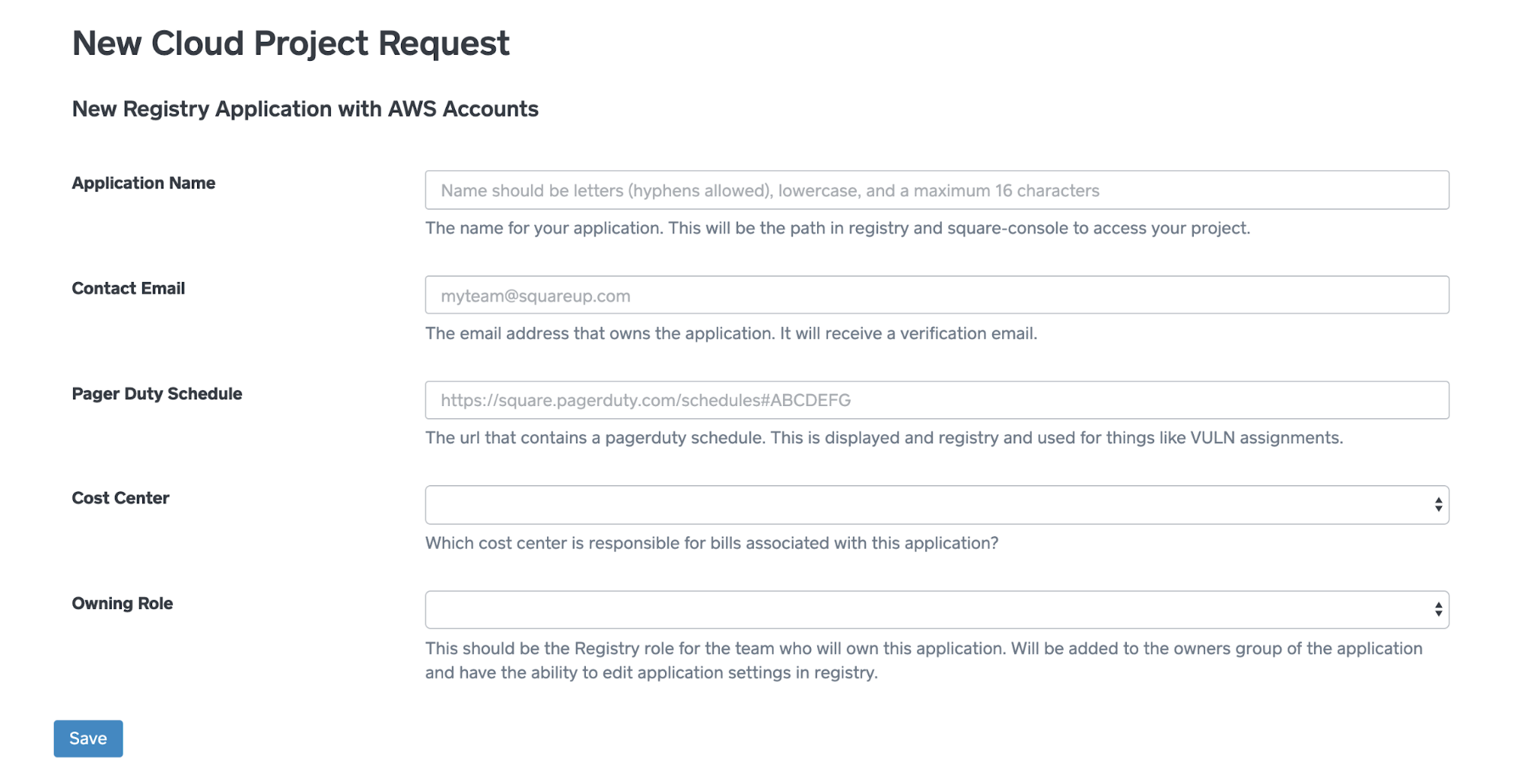

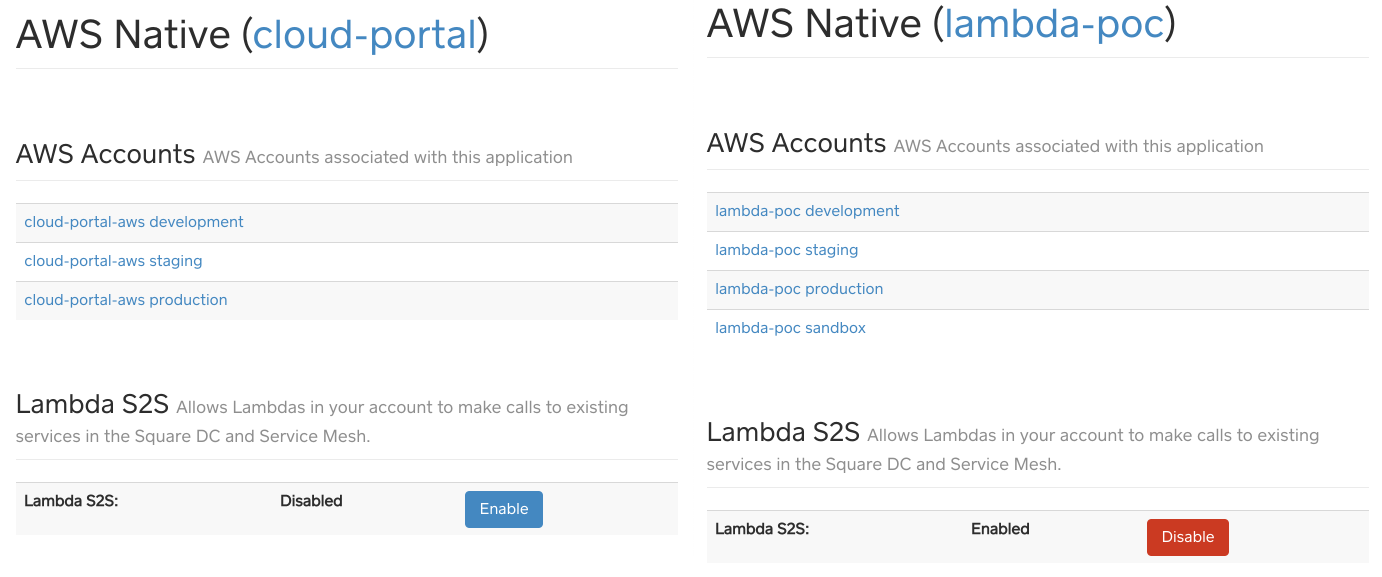

The first hurdles for teams to start building in the cloud are accounts, networking, and setting up infrastructure. The tool to create a new application in the datacenter couldn’t be reused. Instead, the Cloud Foundations team in PIE built an application that allows teams to create development and staging accounts by clicking a button for an existing application, or filling out a short form for a new application. Think of it as an AWS account vending machine. Production and third-party developer sandbox accounts require a few internal approvals before creation, which we're also working to minimize. This means teams will have 3 or 4 AWS accounts per application. We have found the benefits of a multi-account model like this to outweigh the overhead.

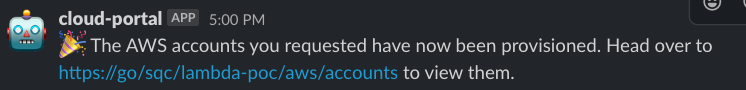

All new accounts are set up by default with subnets in our shared VPCs and a Terraform repository hooked up to our CI/CD pipeline. The full account creation process completes within 30 minutes without any human intervention, and developers are notified via Slack and email when their accounts are ready.

Developers at Square are not used to creating or managing their own infrastructure in the datacenter. The Cloud Foundations team interviewed internal customers to figure out which tools they would want for managing infrastructure in the cloud. The AWS Console UI is really useful, but relying on it to manage infrastructure doesn’t scale. We landed on Terraform, which some teams at Square were already using, as our infrastructure-as-code solution.

We built several Terraform modules to help configure AWS accounts and lambda functions securely. These modules take care of common concerns, like setting up IAM for our CI system to use and stamping out lambda functions with the permissions and conventions needed for s2s to work. Teams use a centrally managed Terraform CI/CD pipeline, where infrastructure changes go through code review like anything else we deploy.

Lambdas and mTLS

We wanted lambdas to be able to call into the Envoy service mesh and participate as if they were any other application at Square. Specifically, we didn't want applications called from a lambda to have to change anything: it should work the same as any other service call. This involved many moving pieces, because all service-to-service calls use Envoy and mTLS. Lambdas needed certificates and a path to Envoy. There isn’t a way to run Envoy as a sidecar for a lambda, so we needed to figure out how requests could reach an Envoy instance.

Credentials

Every lambda needs TLS credentials (a certificate and private key pair) and a set of root CA certificates to perform mTLS. The root CA certificates are stored in an internally accessible S3 bucket that is available to our AWS organization.
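
For illustration, here is how a Go lambda could turn that root CA bundle into a certificate pool once it has been read from S3. This is a minimal sketch that assumes the bundle is a PEM file holding one or more certificates; the bucket, object key, and download code are not described in the post and are left out, and `rootPoolFromPEM` is a hypothetical helper name.

```go
package main

import (
	"crypto/x509"
	"errors"
)

// rootPoolFromPEM builds the set of root CAs used to verify peer certificates.
// pemBundle is assumed (hypothetically) to be the PEM bundle already
// downloaded from the organization-wide S3 bucket.
func rootPoolFromPEM(pemBundle []byte) (*x509.CertPool, error) {
	pool := x509.NewCertPool()
	// AppendCertsFromPEM skips blocks it cannot parse and reports whether
	// at least one certificate was added to the pool.
	if !pool.AppendCertsFromPEM(pemBundle) {
		return nil, errors.New("root CA bundle contained no usable certificates")
	}
	return pool, nil
}
```

On the client side the resulting pool would go into `tls.Config.RootCAs`; a server verifying callers would use it as `tls.Config.ClientCAs`. Failing loudly on an empty bundle is a deliberate choice: an empty pool would make every handshake fail later with a less obvious error.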

Lambda functions, like all other applications at Square, use their TLS credentials to authenticate to other apps. Both Envoy and the server application verify that the caller is authorized to make API calls based on the identity in the client TLS certificate. This makes the credentials highly sensitive, so access to them must follow the principle of least privilege.

We accomplished this in two ways. First, we added metadata to Registry indicating that an application has resources in AWS, along with an off-by-default flag that controls certificate generation. Teams must opt in to certificate generation in AWS, which avoids generating potentially powerful credentials unless an application’s component in the cloud actually requires them.
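
The opt-in rule can be sketched as a filter over Registry records. The `registryApp` type and its field names are hypothetical, since the post does not describe Registry's schema; the point is that credentials are issued only when both conditions hold.

```go
package main

// registryApp is a hypothetical slice of the metadata Registry keeps per app.
type registryApp struct {
	Name            string
	HasAWSResources bool // the app has components running in AWS
	AWSCertsEnabled bool // off-by-default opt-in flag for certificate generation
}

// appsNeedingCerts returns the apps the certificate generator should issue
// credentials for: only those that run in AWS and have explicitly opted in.
func appsNeedingCerts(apps []registryApp) []string {
	var out []string
	for _, a := range apps {
		if a.HasAWSResources && a.AWSCertsEnabled {
			out = append(out, a.Name)
		}
	}
	return out
}
```

In Go the zero value of a `bool` is `false`, so any record that never sets the flag is excluded, which matches the off-by-default behavior described above.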

Second, within AWS, the Security Infrastructure team built a system that checks metadata in Registry and generates short-lived certificates only for applications that need them. This uses AWS Private Certificate Authority (PCA) under the hood. Each certificate is saved to AWS Secrets Manager in a central account, with a resource policy that restricts which AWS account and role can read it. Lambdas cache these credentials for the life of their short-lived execution environment. Certificates are generated every 12 hours and are valid for only 24 hours, which limits an attacker’s window if they are stolen or inadvertently leaked.

This is a brief overview of the certificate generation system we built. We will go into more detail in a future blog post.

Into the service mesh

Envoy is also used for service discovery and load balancing. Since lambdas don't have sidecars and all traffic between services goes through Envoy, we needed an additional piece to route traffic into the service mesh.

We initially tried building an L7 proxy that would re-sign requests coming from lambdas, but this would create a single powerful application capable of impersonating the identity of any other app. We considered this risk unacceptable for our security posture. We also looked at deploying an Envoy in each AWS account that needed to call into the datacenter, but realized it would be an operational burden for both application teams and our central Traffic team within PIE, and would increase costs.

We landed on deploying a “mesh gateway” Envoy as an L4 proxy that lives in our shared Kubernetes cluster, fronted by a network load balancer. The mesh gateway reads the Server Name Indication (SNI) field of the TLS ClientHello to forward each connection to the requested backend service, but it does not terminate TLS: the handshake still happens between the calling lambda and the Envoy on the backend. This allows a lambda to send requests to staging.appname.meshproxy.internaldomain.com and have the mesh gateway route them to the correct backend. For us this was the best compromise: no single Envoy has access to multiple applications' private keys, the operational burden on all teams involved is limited, and lambdas can still take advantage of Envoy features, since an Envoy is on the other end of the request.

Lambda Code

With all the other infrastructure in place, the lambdas need to use it. This means downloading s2s credentials and performing an mTLS handshake. We knew from talking with internal customers that we needed to support multiple lambda runtimes, initially Ruby and Go. We also wanted to maintain as few libraries as possible, especially for the mTLS handshake code. Square's technology landscape is large and the lambda needs custom mTLS logic, so we wanted to avoid duplicating that logic across languages if we could.

Our solution was a Go package that retrieves and caches certificates and handles the mTLS logic within the lambda function. Any lambda that uses the Go runtime can import that package directly. For other languages, we distribute a binary lambda layer to our organization. That layer runs a reverse HTTP proxy that, under the hood, uses the same code a Go HTTP client would, so the mTLS code lives in only one place. On top of it, we developed language-specific libraries that start the binary as a background process and vend correctly configured HTTP clients for lambdas to use. These libraries are much smaller than the Go package, which makes them easier to maintain and makes it easier to accept contributions from our internal developer community.

Everything we’ve built to run inside a lambda relies on normal libraries, not any of the available serverless development frameworks. We aim to be framework agnostic so that teams can choose what best fulfills their product, security, and timeline requirements.

Conclusion

This combination of tooling and infrastructure gives teams the freedom to set up their AWS accounts however they like. The tooling, specifically AWS accounts from a virtual vending machine and Terraform CI/CD pipelines, ensures that accounts are set up and managed consistently. The infrastructure, with a separate AWS account that generates certificates and Envoy running as an application in Kubernetes, creates a secure traffic path from lambdas to the datacenter.

This concludes the overview of what we built to support service-to-service calls originating from AWS Lambda. It was a large, cross-functional effort with contributions from 8 teams within Square. We will be writing a few more blog posts going into more detail on some of these topics in the coming weeks.