Ecosystem Success Metrics

Navigating tradeoffs between different products

Square has an integrated ecosystem of over 35 products for sellers (including Online Store, Square Payroll, Invoices, Square for Retail, Loans, and more). Each individual product has focused success metrics to prioritize at different product milestones (e.g., free trial starts, first revenue event).

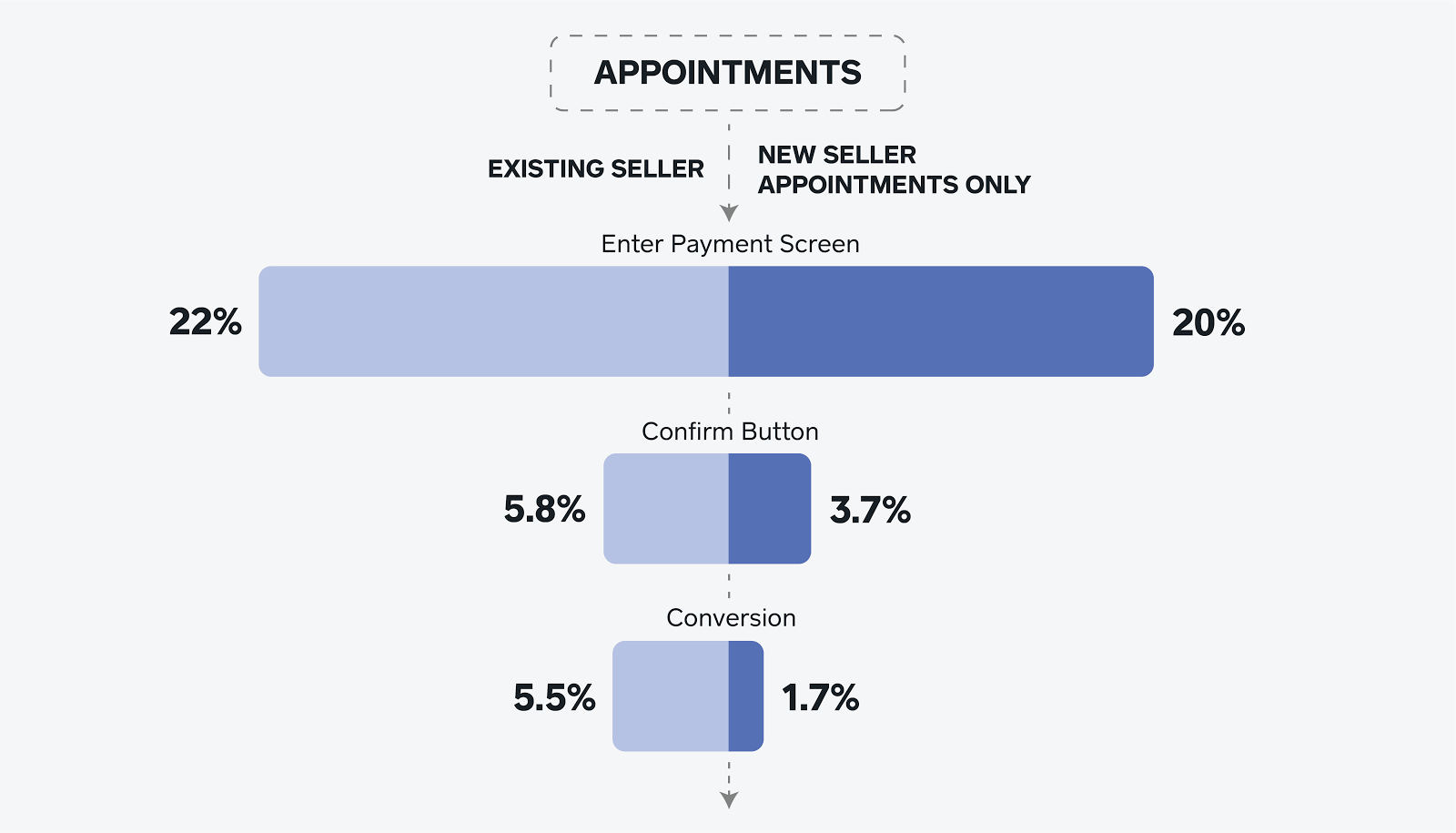

However, for shared spaces such as our public web page (squareup.com), our general web signup flow, and our internal seller dashboard, we often have to make decisions that involve a tradeoff between different products. For example, during onboarding we can suggest certain products over others, so the question becomes: how do we prioritize them? What are our criteria for making tough decisions between products? How do we measure the impact of a specific change on the overall ecosystem? Our strategy is to get the right fit for sellers, but there are many different ways to measure fit, each with its own pros and cons. Let's take a look at some possible approaches to ecosystem success metrics:

Option 1. No standardized ecosystem success metrics policy.

Success metrics function as a target to optimize, which enables teams to set clear goals, align with broader strategy, and iterate rapidly. Without a clear overarching target, each product use case would be evaluated independently, with criteria that change depending on the specific situation. Granted, some policy might still be needed, even if it's just experiment change categorization (e.g., a required 'do no harm' backend change is a different situation from a major 'metric mover' experiment) or funnel stage/product life cycle targeting (e.g., is the goal product conversion, retention, etc.?).

Pros:

- Adaptability. As the company's goals evolve, we can adapt our experimentation strategy without much process overhead.

- Flexibility. Metrics can be tailored to the situation. Having the flexibility to choose the appropriate metric for the experiment would give teams the ability to more directly measure the impact of their experiments, and in some cases, move more quickly.

Cons:

- Alignment speed. Needing approval for every experiment can significantly slow down the pace of authorizing experiments (and sometimes of finalizing decisions).

- Consistency. When each experiment has different goals, it can be harder to maintain an overarching theme in the direction of new features.

- (Human) Bias. Without a policy, each situation might be decided by whoever argues the loudest: 'the squeaky wheel gets the grease.'

- Effort. The work of aligning on success metrics has to be repeated for each individual situation.

Option 2. Revenue or gross profit (with some date cutoff).

This is probably the most common solution, as it is 'product indifferent' and prioritizes products with more revenue. There are definite benefits to using actual revenue, but there are also some downsides that aren't immediately apparent. In an ideal world, we would know a customer's full lifetime revenue beforehand with certainty, i.e., we would know the future. In practice, however, it can be very difficult to predict early on which customers will provide higher lifetime value. Some customers will do a trial run just to see how a product works and not engage further. Other customers have already done their research and dive in right away. Higher value products for larger businesses can also have longer onboarding times. If the date cutoff for revenue is too short, we start to prioritize products that generate revenue faster, not necessarily the ones that provide higher retention and long-term value. If we wait for revenue to cure more fully, it hampers our ability to make decisions quickly.
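
As a minimal sketch of the cutoff tradeoff, the Python below sums revenue per product within a fixed number of days after signup. The event structure, product names, and amounts are hypothetical, chosen only to show how the winning product can flip as the cutoff grows.

```python
from dataclasses import dataclass


@dataclass
class RevenueEvent:
    seller_id: str
    product: str
    days_since_signup: int  # when the revenue was recognized, relative to signup
    amount: float


def revenue_by_product(events, cutoff_days):
    """Total revenue per product, counting only events within `cutoff_days` of signup."""
    totals = {}
    for e in events:
        if e.days_since_signup <= cutoff_days:
            totals[e.product] = totals.get(e.product, 0.0) + e.amount
    return totals


# Hypothetical events: "fast_product" earns early, while "slow_product"
# ramps up later but earns more in total.
events = [
    RevenueEvent("s1", "fast_product", 5, 100.0),
    RevenueEvent("s1", "fast_product", 200, 50.0),
    RevenueEvent("s2", "slow_product", 20, 10.0),
    RevenueEvent("s2", "slow_product", 90, 150.0),
    RevenueEvent("s2", "slow_product", 300, 200.0),
]

short_window = revenue_by_product(events, cutoff_days=30)
long_window = revenue_by_product(events, cutoff_days=365)
print(short_window)  # {'fast_product': 100.0, 'slow_product': 10.0}
print(long_window)   # {'fast_product': 150.0, 'slow_product': 360.0}
```

With a 30-day cutoff the fast-ramping product looks better; with a 365-day cutoff the slow-ramping product does. That flip is the short-term bias a revenue cutoff can introduce.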

Pros:

- Practicality. Businesses need revenue to survive in the long term, so usually this aligns well with a company's financial targets.

- Clarity. The highest revenue product clearly takes priority, making alignment and tie-breaking straightforward.

- Reliability. This metric tends to be well established already, since the company needs to measure it with high accuracy anyway.

- Side note: revenue also makes a great safeguard metric, ensuring we are not just converting people without checking whether their engagement deepens after their initial start.

Cons:

- Short-term bias. Products that are faster to start generating revenue will take priority over products that might have higher long term value, but have a longer ramp up time. There is often a tradeoff between short term and long term revenue.

- As an example: we can increase short term revenue by simply raising our prices, but that can burn a lot of trust very quickly and hurt our brand for the long term.

- Strategic growth alignment. Revenue metrics tend to favor established, larger products. New products tend to fall behind simply because they are smaller, even if they are growing quickly and represent a high-level, long-term strategic investment. It's hard to increase engagement with new products if you don't invest in them early and take on some risk.

- Another way this can hurt a business is by deprioritizing products that drive indirect revenue or enable other products down the road.

- Goodhart's law: "When a measure becomes a target, it ceases to be a good measure." Our core goal is to build great experiences with great products; revenue is a byproduct of engagement and value. When we target only revenue, we start to think that revenue is the same as good experiences (after all, people use our products, right?). This can cause us to lose sight of the customer experience, or to stop investing in new ideas that add delight rather than fast dollars. “We don't make movies to make money. We make money to make more movies.” - Walt Disney.

Option 3. Use a forecasting model.

What if we forecast long-term value (LTV) and optimize based on that model? This way we can account for longer ramp-up times, acquisition costs, bias toward large products, etc.
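
Real LTV models can be far more sophisticated. As a minimal sketch, assume a seller's early monthly revenue stays constant and a fixed fraction of sellers is retained each month, so projected LTV is a discounted geometric sum over a horizon. The function name, parameters, and numbers are hypothetical assumptions, not a description of any production model.

```python
def projected_ltv(monthly_revenue, monthly_retention, horizon_months=36, monthly_discount=0.0):
    """Hypothetical LTV projection: constant monthly revenue with geometric retention."""
    ltv = 0.0
    for month in range(horizon_months):
        survival = monthly_retention ** month        # chance the seller is still active
        discount = (1 + monthly_discount) ** month   # optional time value of money
        ltv += monthly_revenue * survival / discount
    return ltv


# Hypothetical products: one earns more per month early on but churns faster.
fast_ramp = projected_ltv(monthly_revenue=100.0, monthly_retention=0.85)
slow_ramp = projected_ltv(monthly_revenue=50.0, monthly_retention=0.98)
print(round(fast_ramp), round(slow_ramp))  # slow_ramp exceeds fast_ramp
```

Here the product with lower early revenue but higher retention projects to more value than the faster-earning one, which is the long-term focus this option is after. The output is only as good as the retention assumption fed into it, so predicted value still is not actual value.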

Pros:

- Long-term focus. The model can be tuned to focus on long-term value and to prioritize early signals that predict long-term success. Important note: predicted value is not the same as actual value.

- Adaptability. Machine learning engineers (MLEs) can improve this model and adjust it to strategic changes, keeping us moving toward high-level goals and incorporating updated knowledge about customers.

Cons:

- Speed (potentially). Model inputs take time to cure, and predictions can be very noisy early on. This can make iterating quickly very difficult.

- Investment. There needs to be some investment in building out a model that is effective at predicting long term value with early signals.

- Risky. This is projected revenue, not actual revenue, and with that comes the risk of optimizing for a model that doesn't reflect future reality. Some additional considerations:

- Low control. Shifts in the model could change outcomes more than intentional product changes do. Changes could even happen mid-experiment: imagine targeting a model, and halfway through curing the data, the target moves. This can be very frustrating, although some safeguards can be put in place.

- Validation is tricky. There needs to be accountability for whether the predicted value is actually delivered, which takes time and consistent monitoring. Similar to Goodhart's law described in Option 2 above, if we start targeting a model, we end up optimizing the very inputs it relies on, which can undermine its accuracy. For example, if the model uses a certain magic moment as a predictor, and product teams maximize that moment, the moment may lose its predictive value. We also never want to get into a situation where people confuse predicted value with actual value, which can send us down a very risky path.

- Complexity. This is a big one. If the model is difficult to explain, it becomes difficult to target.

- Unknown variance. Using model outputs introduces additional uncertainty from the model's own error, which is not accounted for in the p-value as typically calculated.

- Hypothetical, changing, potentially volatile values. Long term value changes depending on current conditions and the impact of decisions. For example, driving sellers to new products will affect the marginal value of those sellers.

- Human error. The model is maintained by MLEs, so there is more room for errors and bugs than with revenue.

- Availability bias. Older, more established products tend to have more domain knowledge and data behind them than newer products, so knowledge (and potentially model quality) may be uneven across products.
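
The validation concern can be made concrete with a toy example. Suppose a hypothetical model, fit on historical data, credits sellers who hit a "magic moment" with higher predicted LTV. If a treatment pushes more sellers to hit that moment without changing the value they actually generate, the predicted lift looks positive while the realized lift is zero. The coefficients and seller values below are made up for illustration.

```python
from statistics import mean

# Hypothetical linear model learned from historical sellers:
# predicted LTV = BASE + MAGIC_MOMENT_WEIGHT * hit_magic_moment
BASE = 200.0
MAGIC_MOMENT_WEIGHT = 300.0


def predicted_ltv(hit_magic_moment: bool) -> float:
    return BASE + MAGIC_MOMENT_WEIGHT * int(hit_magic_moment)


# Each seller: (hit_magic_moment, realized LTV). In this toy scenario the treatment
# nudges more sellers into the magic moment, but their realized value is unchanged.
control = [(True, 500.0), (False, 200.0), (False, 200.0), (False, 200.0)]
treatment = [(True, 500.0), (True, 200.0), (True, 200.0), (False, 200.0)]


def lift(baseline, variant, value_fn):
    """Difference in mean value between the variant and baseline arms."""
    return mean(value_fn(s) for s in variant) - mean(value_fn(s) for s in baseline)


predicted_lift = lift(control, treatment, lambda s: predicted_ltv(s[0]))
actual_lift = lift(control, treatment, lambda s: s[1])
print(predicted_lift, actual_lift)  # 150.0 0.0
```

Only tracking realized value over time reveals the gap, which is why validating a targeted model takes sustained monitoring.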

Option 4. Prioritize products directly by creating product tiers.

What if we just ask executives what to prioritize? They could weigh financial investment, total addressable market (TAM), partnerships, etc., and provide a list of ecosystem product priorities (e.g., a list of products for which no significant revenue loss is tolerated, a list of lower-tier products, etc.). Alternatively, we could have multiple product tiers with different criteria. There are a few different approaches here, but all of them essentially hand product teams top-down priorities for tactical decision-making.
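
One way a tier list could translate into tactical decisions is a guardrail check: an experiment passes only if no product loses more revenue than its tier tolerates. The tier assignments, product names, and tolerance thresholds below are hypothetical.

```python
# Hypothetical tiers: maximum tolerated relative revenue drop per tier.
TIER_TOLERANCE = {
    "tier_1": 0.00,  # protected: no revenue loss tolerated
    "tier_2": 0.02,  # up to a 2% drop tolerated
    "tier_3": 0.05,  # up to a 5% drop tolerated
}

# Hypothetical product-to-tier assignments handed down by leadership.
PRODUCT_TIER = {
    "product_a": "tier_1",
    "product_b": "tier_2",
    "product_c": "tier_3",
}


def passes_tier_guardrails(relative_revenue_change):
    """Return (ok, violations) given each product's relative revenue change (e.g. -0.03 for -3%)."""
    violations = []
    for product, change in relative_revenue_change.items():
        tolerance = TIER_TOLERANCE[PRODUCT_TIER[product]]
        if change < -tolerance:
            violations.append(product)
    return len(violations) == 0, violations


print(passes_tier_guardrails({"product_a": 0.01, "product_b": -0.015, "product_c": -0.04}))
# (True, [])
print(passes_tier_guardrails({"product_a": -0.01, "product_b": 0.03, "product_c": -0.06}))
# (False, ['product_a', 'product_c'])
```

This sketch compares point estimates only; in practice, a "no significant revenue loss" rule would also need a statistical test or confidence interval around each product's change.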

Pros:

- Transparency. Clear product strategy and decision-making priorities.

- Low effort. Teams are being told what is important, and they just execute that strategy. Alignment is easier when teams get a clear top down decision.

Cons:

- Subjective. This is not a very data-driven decision, and there is a risk of being far off base, with poor strategy caught only very late.

- Bottlenecking from unclear criteria. We are essentially shifting decision-making away from broader metrics and onto leadership directly. As a result, we would need more and more input from leadership, such as clarification on how a product moves to a different tier.

- Product exclusion. Some products with potential may be overlooked because leadership is not measuring the right areas. This might prevent organic growth that a different target would have enabled, and may hurt the ecosystem in the long term.

Option 5. Some combination of actual metric and predictions.

We could take an early metric, like a product's first revenue-generating event, and multiply it by a hard-coded value estimate (updating the estimate periodically). This is arguably an alternate, simpler version of Option 3 (forecasting model). It allows us to align with long-term product strategy in a more data-informed way than the direct, top-down leadership approach of Option 4.
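
The calculation described above can be sketched as follows: each seller who reaches a product's first revenue-generating event within an adoption window is credited with that product's hard-coded value estimate, and the metric is the average credit per seller in an experiment arm. The product names, value estimates, and window length are hypothetical.

```python
# Hypothetical per-product value estimates, refreshed periodically.
VALUE_ESTIMATE = {
    "product_a": 1200.0,
    "product_b": 400.0,
    "product_c": 2500.0,
}
ADOPTION_WINDOW_DAYS = 30  # hypothetical practical cutoff for counting an adoption


def value_weighted_adoption(sellers):
    """Average estimated value per seller in an experiment arm.

    `sellers` is a list of dicts mapping product -> days from signup to that
    product's first revenue event (products never adopted are simply absent).
    """
    total = 0.0
    for first_revenue_day in sellers:
        for product, day in first_revenue_day.items():
            if day <= ADOPTION_WINDOW_DAYS:
                total += VALUE_ESTIMATE[product]
    return total / len(sellers)


control = [{"product_a": 10}, {"product_b": 5}, {}, {}]
treatment = [{"product_a": 12}, {"product_c": 25}, {"product_b": 40}, {}]
print(value_weighted_adoption(control))    # 400.0
print(value_weighted_adoption(treatment))  # 925.0
```

Every adopter of a given product receives identical credit here regardless of the revenue they actually generate, and the adoption of product_b on day 40 falls outside the window and earns nothing. Both behaviors follow directly from the design choice of a fixed estimate and a fixed window.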

Pros:

- Higher control. There is a tradeoff between product adoption (a binary 1/0) and revenue (which can have high variance). This method provides some of the controllability of an adoption metric along with the longer-term value estimate of revenue.

- Speed. We don't have to wait as long as we would for revenue to cure, though a product adoption time frame is still needed for practical purposes.

- Strategic / data informed. Provides a more holistic picture of how to prioritize products with a long-term view, and is relatively easy to update.

Cons:

- Interpretability. This is not really revenue, a model, or an adoption metric, but a combination of all three. A lot depends on the value estimates, so secondary metrics for insights will be important.

- Maintenance. Some components will need ongoing maintenance (e.g., when estimated values change or new products are added). There are also design decisions about these components that need to be made up front and revisited as new information arrives.

- Individual variance. Any time we assign a fixed value to everyone who exhibits a certain behavior (e.g., using product conversion event × expected revenue as a success metric), we often fall victim to the "tyranny of averages": sellers whose actual value differs widely receive identical credit.

Option 6. Some product specific metric.

This depends on which products are in our ecosystem, but we could target a specific product adoption, engagement, or churn event. The broader the ecosystem in terms of customer value, the harder it is to find one metric, but it can work for a time.

Pros:

- Simplicity. Bottom of funnel metrics tend to be easy to measure and understand, which can bring a lot of clarity to teams.

- Autonomy. A specific product metric like this is usually easier for teams to move directly, by reducing drop-off, increasing customer acquisition, etc. It creates alignment around a target that can actually be moved.

Cons:

- Favoritism. Mirroring the core issue of product tradeoffs, the more complex the ecosystem, the harder it is to find a product adoption event that works across products and is fair to products that take longer to onboard.

- Quality is missing. Measuring conversion is straightforward, but it ignores the quality of engagement with a product: a 1/0 adoption metric doesn't distinguish whether someone generates $1 or $1M of revenue.

- Flexibility. Optimizing for a specific product event makes it harder to build a more creative ecosystem and lets one type of product define the limits of the ecosystem. This can hinder the ecosystem's growth in breadth over time.
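
The "quality is missing" concern is easy to see with a toy comparison, in which a binary adoption rate favors one arm while revenue per seller favors the other. The arms and amounts below are hypothetical.

```python
from statistics import mean

# Hypothetical 30-day revenue per seller; 0.0 means the seller never adopted the product.
arm_a = [0.0, 0.0, 50.0, 5000.0]
arm_b = [0.0, 20.0, 30.0, 40.0]


def adoption_rate(revenues):
    """Share of sellers with any revenue (the binary 1/0 adoption metric)."""
    return mean(1 if r > 0 else 0 for r in revenues)


def revenue_per_seller(revenues):
    """Average revenue per seller, including non-adopters as zero."""
    return mean(revenues)


print(adoption_rate(arm_a), adoption_rate(arm_b))            # 0.5 0.75
print(revenue_per_seller(arm_a), revenue_per_seller(arm_b))  # 1262.5 22.5
```

An adoption-only target would pick arm_b here, even though arm_a generates far more value per seller.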

Regardless of which ecosystem metrics a product team or company settles on as a north star, the important thing is to know the limitations of those metrics and their use cases. There are tradeoffs for any choice. And to reiterate, metrics are not a replacement for strategy. Ultimately, the goal is to add value for customers via the product ecosystem; any success metric should measure progress toward that goal and is valuable only insofar as it helps achieve that goal.

